This article is part of a series on the TOGETHER trial. More articles from this series here.

This was a “Do Your Own Research” Twitter Space held on Sunday, April 10, 2022, where I did more or less a brain dump of everything I knew about the trial at the time. It should work as a deep dive for people interested in more detail than I put in my posts, or as a searchable archive.

The TOGETHER Trial list of issues discussed can be found here at ivmmeta.com — the audio will make a lot more sense if you listen to it with that list in front of you.

Charts mentioned:

Transcript

ALEX: So, I don't know if you noticed, but if you've been following my increasingly erratic tweets, I've been delving into the minds of the creators of the TOGETHER trial and starting to try to understand what is going on with it, I guess. What the hell happened? I think I'm starting to form a clear idea of the events, but what has not been done is to get a bunch of folks who have dug into the material to talk through something like the long list of issues presented at ivmmeta.com and get a sense of what everybody thinks, right?

ALEX: So I always remember this idea of, like, if only we knew what we knew, you know. Maybe I read something on ivmmeta and I disagree with it, or maybe I have more to say about it, and maybe somebody else has another thing to say, and there are two fragments of ideas that each seem like dead ends to each of us. But if we put them together, maybe another idea starts. So I just wanted to at least say my thoughts out loud and see if anybody else has anything to add as I try to build an understanding. By this point, I think I have a fairly solid understanding of the depth and breadth of the trial, at least from January to July of 2021. Not the later arms they tried afterwards, like IFN lambda and that stuff. I haven't dug into that.

ALEX: But, yeah, I just wanted to use this space to think out loud a little bit and give folks who might have more material about this an opportunity to add to the pile, so we can maybe connect some clues we might not have connected before.

ALEX: So, basically what I'm going to do is literally just go to ivmmeta.com. At the top, they have this TOGETHER trial analysis. I'll add it as a link.

ALEX: Yep. Okay. So you should be seeing now at the top of the space a link that I will be talking through. So, I've invited a number of people to talk. So, I'll just kind of try to walk through some of these issues and sort of just share my thoughts about the particulars.

ALEX: So, you know, at the top of the page there's this statement by Mills in an email to Steve Kirsch and others, which says there's a clear signal that IVM works in COVID patients, and that it would be significant if more patients were added. It says, if you hear my conversation in some other recorded video, you will hear me retract previous statements where I had been negative. And in that video he's referring to, this guy called Frank Harrell said that the question of whether this study was stopped too early, in light of the political ramifications of needing to demonstrate that the efficacy is really impressive, really could be raised. Ed Mills responds: “I totally agree with Frank.” Ed Mills, by the way, is, let's call him, the mastermind behind the TOGETHER trial; I don't think he would even disagree with that. He's written a lot of the original papers, he's on every paper, and he's doing all the communication on its behalf. He's kind of the guy.

ALEX: Before we even jump in, this thing about whether the trial was stopped too early really bugs me, because there was a lack of clarity about how big the trial was supposed to be. They mention 800 patients per arm in some places, or 681 in others. Having dug in deep enough, I'm convinced they were intending to make it 681 for all the arms, including fluvoxamine and ivermectin, which were running in parallel. But here's the punchline: for fluvoxamine, they actually went all the way up to 741 patients. There's no explanation for why their data monitoring group didn't stop at 681, as they had said they would. But with ivermectin, they stopped exactly at the limit, actually slightly short: 679 patients. Right. So while I don't object that they stopped the ivermectin trial where they said they would, it is kind of weird that they did not stop the fluvoxamine trial where they said they would. And why does this matter, right? Because we might say, hey, a few more patients, what's the problem? But if you get to look at the data over and over again as it comes in, you get to pick a stopping point that looks better for you, right? Every new data point might help or not help. This is why, when you're doing A/B testing on your website, they tell you very clearly: do not stop your test the moment it looks positive for one variant or the other. You have to have set the size in advance, to pre-commit to it basically, so that you don't fall victim to that. Now, there are these things called interim analyses, which are kind of a compromise, right? Because they're saying, look, we may have to wait the whole way, but if we check halfway through, that's okay, because if it's going completely sideways, there's no reason to continue. And that's fine. However, I even found this analysis that says that the more interim points you add, the more chances of a false positive you get.
And I guess the false negative follows from that same analysis.
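The peeking problem is easy to see in a small simulation. This is a toy model under stated assumptions, not the TOGETHER trial's actual statistical design: it assumes a trial where the treatment truly does nothing, that the test statistic behaves like a z-score accumulating in equal batches, and that the trial stops at the first look where |z| exceeds the unadjusted 1.96 threshold. The function name and its parameters are hypothetical.

```python
import math
import random


def false_positive_rate(looks: int, n_trials: int = 20_000, seed: int = 1) -> float:
    """Estimate the share of null trials (no true effect) declared significant
    when the data are checked `looks` times at equally spaced points and the
    trial stops at the first look where |z| > 1.96, with no correction for
    the repeated looks.

    Toy-model assumption: each batch of patients adds an independent N(0, 1)
    increment to a running total, so after j batches the z-statistic is the
    running total divided by sqrt(j).
    """
    rng = random.Random(seed)
    threshold = 1.96  # two-sided 5% critical value for a single look
    positives = 0
    for _ in range(n_trials):
        running_total = 0.0
        for j in range(1, looks + 1):
            running_total += rng.gauss(0.0, 1.0)  # one batch of data, no real effect
            z = running_total / math.sqrt(j)
            if abs(z) > threshold:
                positives += 1  # trial stopped early on a false positive
                break
    return positives / n_trials


if __name__ == "__main__":
    for k in (1, 2, 4, 5, 10):
        print(f"{k:>2} looks: {false_positive_rate(k):.3f}")
```

With a single pre-committed look the estimate sits near 5%, as it should. Each extra unadjusted look gives chance another opportunity to cross the line, so the estimate climbs with the number of looks. The exact figures depend on how many looks there are, how they are spaced, and what boundary is used, which is why group-sequential designs pre-specify stricter per-look thresholds.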

ALEX: I think they said in that paper that with four interim analyses you get about a 10% chance of a false positive, right? That's kind of significant. But they did their interim analyses and they reached the completion point. With fluvoxamine, they didn't even stop there. They continued to an arbitrary point in the future. How do we know whether that point was important or not? We don't know. But for ivermectin, somehow it stopped sharply at 681. In fact, just for spite, at 679, not even 681. So it's kind of weird when he now says, well, you know, maybe we should have continued it. It's like, well, for fluvoxamine you did. This isn't hypothetical: they did do this, in one of the two twin trials that were running mostly in parallel. So this requires explanation.
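The fluvoxamine point can be sketched with the same kind of toy model. Assume, hypothetically, a null trial analysed once at the pre-committed 681 patients and looked at again at 741, the figure mentioned above, and count it as positive if either look crosses the unadjusted 1.96 threshold. This ignores the trial's actual interim schedule and analysis method, and `extra_look_rates` is a hypothetical helper. Because both rates are computed on the same simulated trials, every trial that is positive at 681 stays positive with the extra look, so the second look can only add false positives.

```python
import math
import random


def extra_look_rates(planned_n: int = 681, extended_n: int = 741,
                     n_trials: int = 50_000, seed: int = 2) -> tuple[float, float]:
    """Return (false-positive rate with only the planned look,
    false-positive rate if an unplanned extra look is also allowed).

    Toy null model: each patient contributes an independent N(0, 1) score,
    so the z-statistic after n patients is the sum of scores / sqrt(n).
    """
    rng = random.Random(seed)
    threshold = 1.96
    planned_hits = 0
    either_hits = 0
    for _ in range(n_trials):
        # Draw the summed scores directly: a sum of n N(0, 1) scores is N(0, n).
        sum_planned = rng.gauss(0.0, math.sqrt(planned_n))
        sum_extended = sum_planned + rng.gauss(0.0, math.sqrt(extended_n - planned_n))
        hit_planned = abs(sum_planned / math.sqrt(planned_n)) > threshold
        hit_extended = abs(sum_extended / math.sqrt(extended_n)) > threshold
        planned_hits += hit_planned
        either_hits += hit_planned or hit_extended
    return planned_hits / n_trials, either_hits / n_trials


planned, with_extra_look = extra_look_rates()
print(f"planned look only: {planned:.3f}, with an extra look: {with_extra_look:.3f}")
```

How large the increase is depends on how far past the endpoint the trial runs and how often it is re-examined. The concern raised above is that the extension was not part of the pre-committed plan and has not been explained.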

ALEX: Anyway. So, scrolling down, there's a summary here saying that the paper was updated on April 5 with no indication or explanation. That's true. It goes too deep for me to know whether something nefarious happened there, but the basic rational analysis says they should have declared that they made a correction to the article. Right? You've seen this: when somebody has to make a correction, especially on the pro side, they plaster it everywhere, right? It makes the news. But here it was like, nah, whatever, it was just a typo; they were just going to change it without even mentioning it. They also mention that the authors have not responded to data requests. I don't know which requests they're referring to, but I'm sure several people have filed requests, and some of them are probably quite well credentialed to get the data. But of course, what Mills said to Kirsch in the same email above is that they will make it available through ICODA. It's some kind of “globally coordinated health data led research response to tackle the pandemic”: the International COVID-19 Data Alliance. That's it. The problem with ICODA is that it's funded by the same people who are funding this trial and employing Mills. Well, to be precise, they didn't fund this particular arm, but they funded the setup of the trial. And they are funding Mills, and some of the pharma-adjacent companies that have members in the trial are also members of this organization. Let me find them: Certara and Cytel are two partners of ICODA, right? So ICODA is not some completely independent organization. It has Bill Gates’ fingerprints all over it, as do Mills, Cytel, and Certara. It's highly conflicted, and yet ICODA is being put up front as some kind of neutral third party that can make decisions.
For one, the fluvoxamine paper says clearly that they will give the data to ICODA, but ICODA will ask Mills and Reis (the other co-principal investigator, from Brazil) whether the data should be released. And secondly, it's not an independent party. So this whole ICODA business feels very much like an indirection, because in the original registration they said clearly that they would give anonymized patient data on request once the protocol was completed. The protocol was completed in August, so that's when we should have had this data. Now it's seven months later, and we're talking about whether at some point in the future (because apparently they're quite busy right now) they might give the data to some party that is not really independent, and then we will be able to apply for access to that data. But of course, that application will go back to Mills and Reis. I think this is a little bit of a joke.

ALEX: Anyway, let's get to this, actually, let me check the space and see if anybody's… what’s going on. Oh, we've got Michelle. Hello, Michelle. Approve. Cool.

ALEX: So, again, I'm scrolling down the ivmmeta TOGETHER analysis, which I've linked at the top of the space. You can click there to see it. So I've gone through the preamble. I don't know how long the space is going to go for, but I'm determined to get everything out of my head as much as I can.

ALEX: So yeah, the first criticism is the delay of more than six months, which I've mentioned. I also wrote a piece about this delay a few months ago. There's no real explanation. And even if they said, oh, the journal didn't want to publish it: for fluvoxamine, they published a pre-print on August 23rd. They presented their preliminary results for both ivermectin and fluvoxamine on August 11th. By the 23rd, that's 12 days, not even two weeks, they had a pre-print up. Right. They could've put a pre-print up for ivermectin and then negotiated with the journal as much as they wanted. They didn't do that. So that's definitely cause for concern.

ALEX: No response to data requests, as we've talked about. Okay. The three different death counts. Now this is one where I get baffled, and honestly, I don't see much depth in this criticism. There are different death counts, right? This is an error in the papers?1 That shows he didn't know what he was doing because he got his sums wrong in different tables? Well, these guys did this in a few places. But beyond the schadenfreude, that's the word, I don't fully understand what the implications of this are. Maybe it'll come in handy later, but I'm not a hundred percent sure what to make of it, right? It's true that they present the deaths as 21 or 20 under ivermectin, and 25 or 26, or 24 or 25, under placebo, depending on how you count them, whether it's all-cause or whatever. And it definitely seems like Mills has been inconsistent in how he describes it. There's definitely some messiness going on there. The reason I'm not diving into this too much is that, and maybe this is worth a quick summary of how I approach this: if you look at the list ivmmeta has, we're looking at, what, 40 issues? Some number. In the first phase, I think it was extremely important to start gathering up issues, because they're clues. They're telling you that something has gone wrong, but what went wrong depends on what needles you find in this haystack. Right now, where my mind is at, I've actually got a narrative for what probably went wrong. I've got a story that connects, I don't know, 80% of these together in a way that makes sense. Because if you see 30 problems in the trial, 40 problems, whatever the number is... By the way, I've got more problems than what is here. At this point, I've stopped looking because there are so many.
I've debated with myself whether I should just post a problem per day until they release the stupid data, because there are just so many. Right. But the number of errors can also act in reverse, right? People who are supportive of the trial will say, you guys are just throwing whatever you've got at the wall, this is a Gish gallop, whatever. Okay. But the point here is not to put the claimed errors on a scale and say, we've got 60 pounds of errors, therefore you're retracted. I mean, it could be, especially if a lot of these are validated. Sure. But that's not how I think about it. The way I think about it is: where did these come from? What is their source? Are they 60 (40, 30, whatever number) independent errors? That would be weird, even weirder than having a unified source: one thing that went wrong and caused all of these ripples. So what I'm looking for is a unifying explanation for all these things. The death counts I haven't been able to slot into the hypothesis I'm working with in a particularly meaningful way, so I haven't dug into this one, but there's definitely something here. It should have been noted when they updated the paper, and I can't say a lot more than that. It's just unfortunate that they seem to be quite flippant in how they present the data, but are also happy to just make changes willy-nilly.

ALEX: Let's move on to the next one: the trial was not blind. This is an interesting accusation because, of course, it's a double-blind, randomized, controlled trial. I mean, these are the things you've got, right? Blind, randomized, and controlled. If you start eating away at those, you've got a real issue with the trial.

ALEX: So, this one says ivermectin placebo blinding was done by assigning a letter to each group that was known only to the pharmacist. If a patient received the 3-dose treatment, investigators immediately know that the patient is more likely to be in the treatment group than in the control group. Yes. So here's what this means; I'll just explain it. Let's forget everything else about the various allocations. For most of the trial, the baseline allocation was that they had three medicines they were testing: fluvoxamine, metformin (which was stopped at one point), and ivermectin. And then there was a placebo arm, and the algorithm was allocating between these arms. However, these treatments have different durations. Metformin was a 10-day course, as was fluvoxamine: 10 days, morning and night, so about 20 pills to take. Whereas ivermectin, at least after it got restarted, was a 3-dose regimen. So what do you do in this case? How do you cover this divide? Well, when the trial started, if you look at the original protocol, dated December 17th, what they were going to do is give everybody 20 pills, right? Ten days, morning and night: 20 pills. If you were on ivermectin, the three morning pills (morning one, morning two, morning three) were going to be ivermectin, and you were going to get 17 placebos anyway. If you were on one of the other treatment arms, you would get 20 real pills. I'm not sure whether metformin was in fact 20 pills or there was some filling in with placebo, but you get the point. However, when they restarted with high-dose ivermectin, they changed how they approached that. They said, we're going to create a placebo that is 3-day and one that is 10-day. So some of the placebo patients are going to be getting three days of placebo.
And some of the placebo patients are going to be getting ten days of placebo. One of the arms is going to be taking three days of treatment, and another one, fluvoxamine, is going to be taking 10 days of treatment twice a day. And again, whatever it is they did for metformin; I haven't dug into that one as much. Now here's the problem with that, right? Let's say you've got 200 patients and you are allocating them across four arms. We're talking about the phase after March 23rd, when they were allocating to the high-dose ivermectin arm. Let's say you put 50 on high-dose ivermectin, 50 on fluvoxamine, 50 on metformin, and 50 on placebo. And within the placebo, you split them, right? You either do half 3-day and half 10-day, or maybe you do 2/3 10-day, because metformin and fluvoxamine are both 10-day, and 1/3 3-day. Let's say you split it in half. So then you've got 25 patients within the 50 placebo who are getting 3-day placebo and 25 who are getting 10-day placebo to match the metformin/fluvoxamine regimens. Now, the problem is that the 3-dose group, whatever the treatment, whether placebo or ivermectin, was given one letter. If I see that a patient has that letter (let's say, I don't know, B) on a grouping that was supposed to be blind, right, I'm not supposed to know what that is. But in fact, I do have information. I know that there are 25 placebo patients and 50 treatment patients in that group, which means there's a two-thirds chance this person is an ivermectin patient. Right. It's not completely blind; this already violated the blinding of the trial. Because while I'm not completely sure that a specific patient I'm looking at is taking ivermectin, I am about 67% sure.
And if I want to sabotage them, I could totally do that.
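The two-thirds figure is a simple conditional probability. A minimal sketch, assuming, as in the example above, that every 3-dose patient (active or placebo) carries the same letter and is otherwise indistinguishable; the allocation numbers are the illustrative ones from the discussion, not trial data, and `prob_active_given_label` is a hypothetical helper.

```python
from fractions import Fraction


def prob_active_given_label(n_active, n_same_schedule_placebo) -> Fraction:
    """Chance that a patient carrying the 3-dose group's shared letter is on
    the active drug, assuming that letter is given to every 3-dose patient
    (active or placebo) and nothing else distinguishes them."""
    active = Fraction(n_active)
    placebo = Fraction(n_same_schedule_placebo)
    return active / (active + placebo)


# Illustrative numbers from the discussion: 50 high-dose ivermectin patients,
# 50 placebo patients split evenly between 3-day and 10-day schedules.
even_split = prob_active_given_label(50, 25)
print(even_split, float(even_split))  # 2/3 0.6666666666666666

# The alternative split mentioned: two thirds of placebo on the 10-day schedule,
# leaving one third of 50 (50/3 patients, on average) on the 3-day schedule.
third_split = prob_active_given_label(50, Fraction(50, 3))
print(third_split, float(third_split))  # 3/4 0.75
```

Under the even split, the letter alone moves an observer from no information to two-thirds confidence; the fewer 3-day placebo patients there are, the more the letter leaks.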

ALEX: I don't think that happened, based on the data I saw from the per-protocol analysis. But in a way, what I think shouldn't matter, right? The point is: did they blind appropriately or not? And it does seem that this was a feature of the revised protocol of March 23rd. I think if you go to togethertrial.com/protocols, this would be version 2.0, which funnily has a parenthesis next to it saying “first version.” So it's kind of confusing, but that one talks about these splits in the placebo group. Anyway, I do agree that the trial was not blind, or at least that its blinding was compromised. And the page says: “note that we only know about this blinding failure, because the journal required the authors to restrict to the 3-day placebo group. Also note that this may apply to all arms of the TOGETHER trial and that it would have been trivial to avoid if desired.” This is a very important point.

ALEX: Some of the delay has been attributed by Mills to the journal. What this means academically is that there was a lot of back and forth, a lot of corrections, a lot of insistence, and quite a bit of unhappiness, I suppose, on the part of the journal about what they were looking at. So they forced them to make a number of changes to their manuscript. One of the changes that Mills attributes to the journal, in his email to Kirsch, I believe, or maybe in the talk he gave afterwards, was this allocation of the 3-day placebo, because the per-protocol placebo was not described the same way for fluvoxamine. For fluvoxamine, they just said any placebo patients who followed the protocol, which includes 3-day placebo. Whereas for ivermectin, they said only 3-day placebo patients. Mills says the journal made them do this. The problem is that this revealed this feature of the trial. Until then, from the metformin papers and the fluvoxamine paper, we didn't know that this was happening. Now, truth be told, it's in the protocol, right? If you read it in detail, you'll see it. But the problem with this trial is that if you gather all the materials that I and some others have gone through to understand what's going on, we're talking about 20 thick documents, thousands of pages, right? Long story short, they could have made this a lot cleaner than they did.

MICHELLE: Alex, when you say trivial to avoid, I dunno if my interpretation is right, but it's that they didn't have to structure the placebo that way in the first place. Like if they had just used the original protocol.

ALEX: Correct.

MICHELLE: Very unclear why they didn't do that.

ALEX: One interesting thing about this: in the review on the Gates Open Research portal, I believe, there's this open peer review, which Mills responded to. That was an interesting document to look into. One of the people who was very critical of their committee not being independent actually praised them for doing this, but praised them in an interesting way. He said this is a very innovative approach to the problem. Actually, I'm not sure it was the same reviewer; maybe it was the other one. But this actually says something: that this is special. This is not a normal feature of these kinds of trials. Even among adaptive trials, this is not normal. Maybe there is some discussion in the background, and we could find research that presents this as an improvement on the placebo procedure. However, that doesn't mean the reviewer fully understood what was happening, or that it was a good idea. All we know is that this is a novel feature of the trial, and naively it sounds to us like it affected the blinding. If it did not, it should be explained somewhere why that was not the case.

MICHELLE: Well, and you also lose... it doesn't make sense, because the whole point is to share placebo patients across all the arms. If you start giving them different doses, now you can't share them. So you've reduced... nothing about it makes sense to me.

ALEX: They do share them. It's only in the per-protocol analysis that you don't, and that was done only in the ivermectin paper, I think at the insistence of the journal. So that was not their plan.

MICHELLE: Yeah. But I dunno, it's not clear why they wouldn't just give everybody the 10 pills and then divide it out, like, oh, well, only one of those is an active pill. Then it's more even for everybody and nobody loses. There's nothing (inaudible). So.

ALEX: No, and this is actually one of the reasons I'm curious to see the early data, right? One of the things that didn't happen (I guess they'll address it later) is that they didn't release... and I realize you kind of need a visual map of the trial to understand what I'm talking about, but they had the low-dose ivermectin arm at the beginning, from, I believe, January 20th to somewhere around March 4th. They don't tell us exactly when they stopped. They had that arm going with the full 20-dose placebo, and even the ivermectin arm was 20 doses, et cetera, even though only three were active. That data is not released. They have that data somewhere. They have not released it, and they have not even told us (which is another interesting feature here) why they did this. They haven't told us, basically, what the decision was, maybe from the data and safety monitoring committee, about resetting the dose. When was that decision taken? Because they seem to have had a protocol ready to go to revise the trial three weeks in, with only 19 patients recruited. Did the committee come together at five patients and say, we don't like this? If they could do it at five, wouldn't they do it at the beginning? It's really strange that the reset happened. They stopped that arm and they haven't given us that data, which I think would elucidate a ton.

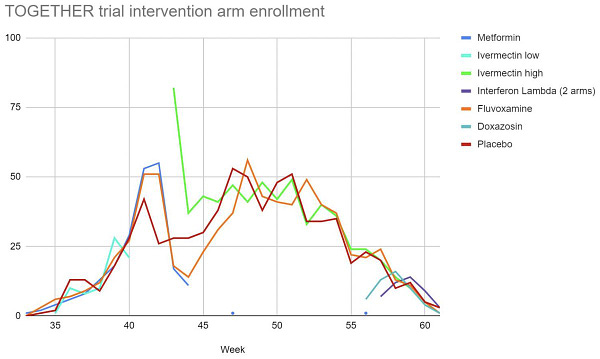

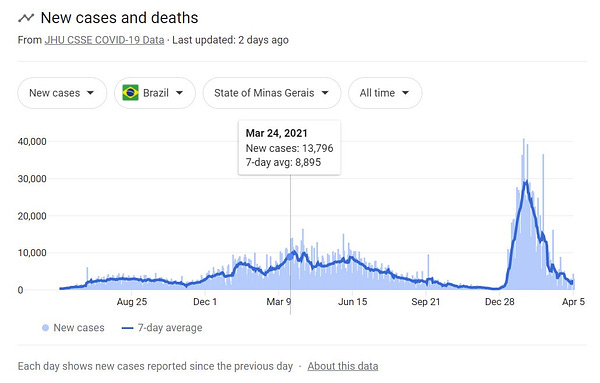

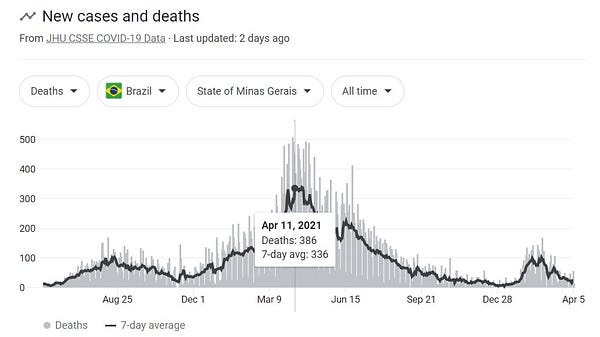

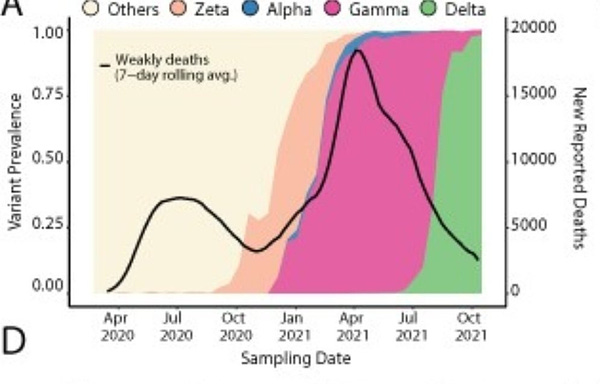

ALEX: Anyway, the next one is: patient counts did not match the previously released enrollment graph. If you've seen my Substack, doyourownresearch.substack.com, my first piece on TOGETHER, which was kind of just getting some ideas out there, shows how we reverse engineered the released graph. They released this, I dunno what to call it, stacked chart of enrollments, which was really beautiful, but they didn't release the data with it. It turns out it's quite easy to reverse engineer. Well, not simple, but not very complicated either: you apply a grid and you can count. And what we saw is that the numbers they claim only match if they took placebo patients from the interval between the low-dose and high-dose ivermectin arms. Basically, what happened is they had the low-dose ivermectin arm, which went on for, I believe, something like six weeks. Then they paused for two weeks. And then they started again with the high dose. Right? In the meantime, the placebo arm was ongoing, the fluvoxamine arm was ongoing, and the metformin arm was ongoing. Only on the ivermectin side did you have this gap. And from what I've seen of the numbers, everything I add together tells the same story: the placebo patients from those two weeks after the low-dose IVM arm was stopped were used as placebo for the high-dose ivermectin trial. So they were offset by two weeks, actually a bit more than two weeks, into this trial. Now, why is this a problem, right? They're just placebo patients, surely... It gets messier, but we'll talk about it later. It's a problem when you realize that the weeks right before and right after the high-dose arm started, with the Gamma variant, were literally the worst days of the pandemic for Brazil. They were not just any days; they were the absolute worst.
Daily deaths were something like twice as high as at any other peak they had. I'm getting images of Italy early in the pandemic: just absolute chaos. Right. So it's conspicuous that this weird dislocation of patients is happening exactly as that wave is first building and then cresting over Brazil, and specifically over the state of Minas Gerais. And to anybody from Brazil, I apologize, please don't kill me for butchering that pronunciation. I'm doing my best.
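The underlying concern is concurrency: a placebo group is supposed to be enrolled over the same period as the arm it is compared with, so both face the same variant and the same hospital conditions. A minimal sketch of the check one could run if per-patient enrollment dates were released, flagging placebo patients enrolled outside the treatment arm's window. The function name, the window, and every date below are hypothetical and made up for illustration.

```python
from datetime import date


def non_concurrent_controls(placebo_dates: list[date], arm_start: date, arm_end: date) -> list[date]:
    """Return the enrollment dates of placebo patients enrolled outside the
    comparison arm's enrollment window [arm_start, arm_end], inclusive.
    Such patients are not concurrent controls for that arm."""
    return [d for d in placebo_dates if not (arm_start <= d <= arm_end)]


# Made-up dates for illustration only; per-patient enrollment dates for the
# TOGETHER trial have not been released.
placebo_enrollment = [date(2021, 3, 8), date(2021, 3, 15), date(2021, 3, 25), date(2021, 4, 2)]
flagged = non_concurrent_controls(placebo_enrollment, arm_start=date(2021, 3, 23), arm_end=date(2021, 8, 6))
print(flagged)
```

With the real data, a non-empty result for the high-dose ivermectin comparison would directly confirm (or an empty one refute) the offset inferred from the reverse-engineered chart.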

ALEX: Okay, so, funding conflict. I want to say a little bit about funding. What they're saying here is very narrow: the original protocol said the trial was funded by the Bill and Melinda Gates Foundation, whereas the later protocols do not mention this. What I understand happened is that the Gates Foundation funded the framework, the setup of the trial, right? If it had an office, or needed materials to buy, computers, domain names, whatever it is you need, that infrastructure was funded by the Gates Foundation. But the Gates Foundation did not fund this particular trial. Now, they put that in there (maybe they thought the Gates Foundation would fund it and then it backed out, whatever it is), but in later protocols and every paper since then, it's very consistent that the funding has come from the Rainwater Foundation and Fast Grants. And from all I know of the people who funded those trials, they're good people. I know it sounds weird, but at least for Fast Grants I have some direct knowledge, and I have some vague knowledge of the Rainwater people. They seem like they're coming from a good place. But again, later we'll get into the conflict of interest stuff, and I'll mention why I don't make a big fuss about that. Here, though, the funding conflict element they're mentioning is just this: they initially said the trial was funded by the Gates Foundation, then that disappeared, and this has not been explained. And like a lot of these things, it's understandable. You can imagine what happened, right? They had some early conversations and the foundation said, yeah, sure, we'll fund it. And then it came back and said, no, I don't think we can do it, or whatever. And you can see these artifacts show up.
But a little bit of explanation would go a long way. This is something they could have said, like, look, here's what happened. Now we're just stuck asking, are they hiding something? Or whatever. Because again, we're being forced to look through 20 different documents. There isn't one write-up that just makes everything plain. That's it for the funding conflict.

ALEX: Now, the DSMC not being independent: that's where things start getting really hairy. I've written about this. Was I the person who first picked this up? I'm not a hundred percent sure, but I was definitely among the folks who raised it, and I did more work on this one, so I have a lot to say. The issue is that this guy, Kristian Thorlund, is the chairman of the Data and Safety Monitoring Committee. Now, on its own, this is fine. Right. He's a professor, whatever. But when you have a committee, keep in mind that the chairman both controls how the meetings flow and usually has a special tiebreaker vote. I know this from board meetings, right? I don't know if this particular committee has that structure, but that's usually how a chairman's position is special. Now, the problem is that Thorlund is not just Mills' friend, or acquaintance, or coworker at both Cytel and McMaster University. All these things are probably true, but they're just the beginning of the issues. The problem is that Thorlund and Mills co-founded a company called MTEK Sciences, and the TOGETHER trial's inquiries went to an MTEK Sciences email address. If you go to togethertrial.com on the Internet Archive and go back to the first version, the address they send you to for inquiries is, I believe, info at MTEK sciences.com. And if you look at the FAQ, it says the co-principal investigators are Mills and Thorlund. So Thorlund is not some independent third party who is just wading in, or someone they brought in to provide independent guidance. He is deeply, deeply involved with the design of this trial. And if you go further back in their shared literature, Mills and Thorlund, by the way, have written over 100 papers together. I believe it was 101 to be precise, just an absurd number of papers they've co-authored.
They have a very deep and long collaboration. Some of those papers are about this kind of adaptive trial, and from their time at MTEK, one of their papers is about High Efficiency Clinical Trials (HECT). And they also name another person, I believe Jonas Häggström, who works at MTEK. And he's also on this supposedly independent, supposedly unconnected Data and Safety Monitoring Committee. Right. So you've now got two people on this committee of, I believe, five, who are deeply connected to Mills, to Cytel, and to MTEK. And before Häggström was at MTEK, he was working at, you guessed it, the Bill and Melinda Gates Foundation. This isn't what you do if you want a committee that, by the way, controls when things begin and end: when a trial has completed, when there's futility, all these decisions that are attributed to this quote-unquote independent Data and Safety Monitoring Committee, right? And this committee has one person who is as deep into it as Mills and one person who worked for them at MTEK, followed them to Cytel, and whose career is very, very closely linked to theirs. This is not what independence looks like.

MICHELLE: So the other thing that rubs me the wrong way on that, and I don't know if it's standard, is that they are not included on the publication. So they're not listed in the conflicts of interest, and you don't know about them unless you dig them up. Would you agree with that?

ALEX: That it should have been done?

MICHELLE: Well, all the authors on the paper have to list their conflicts of interest. So you can at least see, oh, you're funded separately by the Bill and Melinda Gates Foundation. The DSMC members are not listed there, so you don't know what their conflicts of interest are.

ALEX: Well, what I would say is that they should be beyond reproach so that if you look at them, there should be nothing to find. So that's fine, that they're not listed.

MICHELLE: I guess.

ALEX: The problem is that if you have a DSMC and you'd have to list a long list of conflicts of interest, then, you know, just forget about it! Just say, look, we got together with our buddies, decided whatever we wanted to do, and we did it. Just be honest. At least say that. By the way, just to mention something people might not understand, and that I know from the startup world: MTEK was acquired by Cytel in 2019, a very short time before this trial. It's actually quite common, when a startup is acquired, to have performance targets tied to the technology or products you sell to your acquirer. So I don't know this for a fact, but I definitely would not rule it out. (inaudible) could have not just Cytel stock that would go up if the trial goes well, or whatever; you could have an explicit target in the contract that this trial should succeed. Again, I don't know this, but I can hypothesize it because it's very normal, and my point is that I shouldn't have to ask this question. Yeah, so could Mills. Okay, fine. He's just doing his job. It's fine for Mills to want to succeed. But the DSMC should ideally be neutral and disinterested in the result of the trial. It's called a Data and Safety Monitoring Committee. What they should want is for the data, and the safety of the patients, to be handled to the highest standard, and that's it. Whether it comes back positive or negative, they should not care. And neither Thorlund nor Häggström, I believe, can say that. And of course there are other people on there who have written many papers with Mills; Sonal Singh has written 26 papers with him.
I mean, I just don't get it. On some level I'm like, this is just too much. Just find some random people. They could agree with you, but they don't have to have many years of shared academic career with you. It just baffles me that it's this blatant. Anyway, that's the DSMC-not-independent piece. And this is where things start to gel, because you're like, okay, so the people who were making decisions about what to start and what to stop are not independent. The starting and stopping appears to be kind of odd. And we can't rely on the DSMC here; we can't trust them to have done this correctly. What's worse is that this was noted in the open peer review at gatesopenresearch dot whatever. And Mills responded and said, you know what, that's a good point, I'm going to take Thorlund's vote away in that committee. First of all, this was in August, so everything we're talking about had already happened by then. And secondly, the reviewer comes back and says, well, you're not removing him from the committee, and that's what I asked you to do. He's still chairman, he still runs the agenda, he possibly still has a tiebreaker vote, and he's definitely in the room. So he could intimidate others, whatever; you don't know what those conversations are like. Again, we don't have minutes, we don't have anything. So that reviewer, one of the two, actually withheld his full approval. If you go there, this trial has full approval from one reviewer and, from the other, what I think they call approval with reservations or something like that. It's not a green tick, it's a green question mark. So on this topic specifically, this reviewer was like, yeah, sorry, man, this isn't okay.
And you shouldn't do this. And again, the question is, why didn't Mills just say, you know, that's a good point? As the reviewer said, if they wanted to consult a statistician like Thorlund, they could call him into the meeting when they want to and tell him to leave, but they need to be able to talk alone. And that was not possible with this arrangement of the committee. And then we get into the unequal randomization. By the way, if anybody wants to talk, feel free to ask for the mic and bring your wisdom to the group. Part of this is just to make a document that people who are this interested can listen to, and part of this is to get my thoughts out as well.

ALEX: Anyway, unequal randomization, significant confounding (inaudible). The trial reports 1:1:1:1 randomization; however, independent analysis shows much higher enrollment in the ivermectin treatment arm towards the start of the trial. So this is another version of the problem we talked about before. By my research, they had 75 placebo patients from earlier. Now, what happens if you're an algorithm allocating patients to these different arms in blocks, stratified by age and site? Here's what I hypothesize happened. I might not know what I'm talking about when it comes to biology, but this is computer science, so this is actually my area. It looks like the ivermectin arm was suspended for two weeks plus, maybe a bit longer, which means the algorithm was making three-arm blocks: blocks of patients to be allocated to metformin, fluvoxamine, and placebo, again stratified by age and site. So each block had to have enough patients from the two age cohorts, above 50 and below 50. But the IVM arm was suspended, so the blocks it made were shorter. They were smaller. Now, the IVM arm appears again on the horizon on March 23rd. You can see the graph on the website, but it doesn't tell the whole story. What I think happened is that the algorithm realized the arm it thought had gone away had not gone away, and therefore it had a bunch of blocks that were under-allocated. So it started taking every patient that came in and backfilling the blocks it had created in the previous two weeks with an additional patient. And that patient was always, or almost always, an IVM treatment patient: about 75% of the time, by the looks of it. Now, this has two problems. Remember what we said about the 3-dose thing before?
Right. So if you see a patient with 3 doses, whatever. Here it's even more blatant, because in this particular week 75% of the patients coming in are IVM treatment patients. You know by the date, and you know by the letter. And also, that particular week was terrible for Brazil. It's one of the worst weeks, probably the worst week in absolute terms, of the whole pandemic, especially in that state, Minas Gerais. So, coincidence? I don't know. It looks awfully odd, but regardless of intent, the point is that this matters a lot, because you get these patients with a super high case fatality rate and you disproportionately allocate them to the ivermectin arm, while the placebo arm was allocated before. This is a complete and total violation of the structure of a clinical trial. And it all comes back to the decisions by this committee. They decide when to start things and when to stop things. If they were independent, you could say, look, the designers of the trial did not know anything about this. But since they are most certainly not independent, it all congeals, and there are real questions you have to ask. Did they know about this? Was this coordinated in some way? They were on the ground in Brazil, running a clinical trial, getting data. They were basically able to have a real-time dashboard, not of Brazil in general, but specifically of their own patients, showing how the case fatality rate was evolving exactly in the places they were. The opportunity was there. The motive we can debate, but what's showing up is that they have way too many knobs in their hands, and the results look like those knobs were tweaked, for whatever reason, intentionally or not, in a very specific way.
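The backfilling hypothesis can be made concrete with a toy simulation. This is a minimal Python sketch under loudly stated assumptions: it is not the TOGETHER allocation software (which we haven't seen), it ignores the stratification by age and site, and the rule "backfill every owed ivermectin slot before anything else" is a hypothetical, extreme version of the behavior described above. The function names `permuted_block` and `simulate` are illustrative. It only shows how such a rule would produce a run of ivermectin allocations right after the arm resumes, while the placebo patients those slots "belong" to were enrolled weeks earlier.

```python
import random
from collections import Counter

ARMS = ["ivermectin", "fluvoxamine", "metformin", "placebo"]

def permuted_block(arms, rng):
    """One permuted block: each arm appears once, in random order."""
    block = list(arms)
    rng.shuffle(block)
    return block

def simulate(n_paused, n_resumed, seed=0):
    """Toy allocation log of (phase, arm) pairs.

    Hypothetical rule: each 3-arm block built while ivermectin was paused
    'owes' one ivermectin slot, and owed slots are backfilled first once
    the arm resumes. Stratification by age and site is ignored.
    """
    rng = random.Random(seed)
    log = []
    active = [a for a in ARMS if a != "ivermectin"]
    queue, owed = [], 0
    for _ in range(n_paused):
        if not queue:
            queue = permuted_block(active, rng)
            owed += 1  # this block is missing its ivermectin slot
        log.append(("paused", queue.pop()))
    queue = []
    for _ in range(n_resumed):
        if owed:
            owed -= 1
            log.append(("resumed", "ivermectin"))  # backfill an old block
            continue
        if not queue:
            queue = permuted_block(ARMS, rng)
        log.append(("resumed", queue.pop()))
    return log

log = simulate(n_paused=30, n_resumed=20, seed=1)
paused = [arm for phase, arm in log if phase == "paused"]
resumed = [arm for phase, arm in log if phase == "resumed"]
print(Counter(paused))  # no ivermectin while the arm was paused
print(resumed[:10])     # the first 10 resumed patients are all backfilled ivermectin
```

In this toy every backfilled slot goes to ivermectin, so the skew is 100% rather than the roughly 75% estimated from the real data; interleaving backfill with fresh 4-arm blocks would soften it. Either way, the ivermectin patients land in whatever calendar window follows the pause, not alongside their placebo counterparts.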

ALEX: By the way, there's another implication from this. I resisted it for quite a while, but I'm getting more and more convinced about it: not only did this perturbation make the ivermectin paper look worse, it made the fluvoxamine paper look better. And just as a short aside, the fluvoxamine paper is fucking weird. They said fluvoxamine showed about a 30% improvement in, was it mortality or hospitalization? I'm not sure, but the headline number was like a 31% improvement if you take all the patients. But then they have the secondary analysis where, if you take only the per-protocol numbers, it was 91% effective. 30% or 91%? These numbers are so different as to be in different universes. And many people were describing these results as, wow, fluvoxamine is even better than we thought. I have to say, I took that conclusion as well, but then I was like, 91%? This basically made the coronavirus go away, no matter what you had or who you were. We're talking about high-BMI patients with comorbidities here. We're talking about people, some of them with recent cancer, with kidney problems, and several days into the disease. And taking fluvoxamine, apparently 91% of them did not hit the (inaudible). It's just too much. People, myself included, should have stood up and paid attention when we learned that number, in September I think, because it's just outrageous. And especially the difference between 30 and 91 is absurd. What does per-protocol mean? Not what it means in this trial, which is another story, but in general? It means that you don't just look at all the patients; you look at the patients who you made sure took all the medication.
And remember, fluvoxamine was given as 20 doses over 10 days, so it's quite a complicated regimen. But at the same time, when they said per-protocol in that paper, they meant 80% adherence. So you had to have taken at least 16 of the 20 doses. It wasn't full adherence, just mostly adherence. So you can see why they would have some drop-off, and they did. Some patients, in both the placebo and the treatment arm, did not go the whole way. That's fine. But it still doesn't explain going from 30 to 91. That's a whole other level.
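To see how an intention-to-treat number and a per-protocol number can drift apart, here is a minimal Python sketch. The 20-dose regimen and the 16-of-20 (80%) threshold come from the discussion above; the cohort and its outcomes are invented, and the names `Patient`, `is_per_protocol`, and `relative_risk` are illustrative, not anything from the trial's analysis code.

```python
from dataclasses import dataclass
from fractions import Fraction

DOSES_PRESCRIBED = 20                 # twice daily for 10 days
ADHERENCE_THRESHOLD = Fraction(4, 5)  # per-protocol meant at least 80% adherence

@dataclass
class Patient:
    arm: str          # "treatment" or "placebo"
    doses_taken: int
    event: bool       # hypothetical primary-outcome event

def is_per_protocol(p: Patient) -> bool:
    """At least 80% of prescribed doses, i.e. 16 of 20 (exact arithmetic)."""
    return Fraction(p.doses_taken, DOSES_PRESCRIBED) >= ADHERENCE_THRESHOLD

def relative_risk(patients: list) -> float:
    """Event rate in the treatment arm divided by the event rate in placebo."""
    def rate(arm: str) -> float:
        group = [p for p in patients if p.arm == arm]
        return sum(p.event for p in group) / len(group)
    return rate("treatment") / rate("placebo")

# Made-up cohort: who drops out, and what happens to them, drives the gap
cohort = (
    [Patient("treatment", 20, i < 1) for i in range(8)]   # adherent, 1 event
    + [Patient("treatment", 5, True) for _ in range(2)]   # non-adherent, 2 events
    + [Patient("placebo", 20, i < 3) for i in range(8)]   # adherent, 3 events
    + [Patient("placebo", 5, i < 1) for i in range(2)]    # non-adherent, 1 event
)

itt = relative_risk(cohort)
pp = relative_risk([p for p in cohort if is_per_protocol(p)])
print(f"ITT RR = {itt:.2f}, per-protocol RR = {pp:.2f}")  # ITT RR = 0.75, per-protocol RR = 0.33
```

In this invented cohort the ITT relative risk is 0.75 (a 25% reduction) and the per-protocol one is 0.33 (a 67% reduction), purely because of who gets excluded. It says nothing about what actually happened in the fluvoxamine paper; it only shows the mechanism by which the two numbers can diverge.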

ALEX: Anyway, yeah. I don't actually know what happened with the fluvoxamine paper. As I mentioned, it looks to me like, because of the stratification and randomization issue here, with the offset placebo patients, benefit was moved away from ivermectin and towards the placebo and fluvoxamine arms. I know that sounds like a huge accusation, but math is math. I don't know what to do. I'm just looking at it and saying what I see. I really did not expect this to show up.

ALEX: Next one, another mystery: missing time-from-onset patients show statistically significant efficacy. This was one of Michelle's big findings, I think. Do you want to talk this through?

MICHELLE: Me? Yeah. sorry, I'm just approving here. Mathew just jumped on too. Let's see here…

ALEX: It says missing time from onset patients show statistically significant efficacy, you know, there's 317 ghost group.

MICHELLE: Yeah. So I don't have the numbers on my fingertips, but they basically had the full number of patients in placebo patients. And then they broke out, the per-protocol patients, which I guess are people who had either only the three placebo doses and also a hundred percent adherence, whatever that means. I guess they polled them to make sure they actually followed through with their dosing.

ALEX: And this is for the time-from-onset analysis, the three-days-before thing. I don't think this applies to the per-protocol thing, does it?

MICHELLE: Yeah. That's the whole reason they're missing data.

ALEX: Really? OK. So go ahead.

MICHELLE: Yeah. So there, so they took on the per-protocol patients. I should just pull up the paper.

ALEX: This might be interesting. Actually, we may have a different understanding of this, which is exactly the way to have this conversation. Go ahead.

MICHELLE: So, for whatever reason, they are missing a lot of placebo patients in the subgroup analysis for time from symptom onset, where they break out, basically: did it make a difference if you were in the zero-to-three-day group or the four-to-seven-day group? For all the other categories, they have a lot more patients included. They have a few missing, but not as many. In this one they're missing a third or more of the patients. And, oh yeah, you're right, I'm mixing up the per-protocol thing. So the interesting thing is that the overall effect of ivermectin was a relative risk of about 0.9, which is like 10% effectiveness, but, I don't know if this is the right way to say it, it's not statistically significant; the confidence interval is too wide based on their frequentist statistics. So you see an effect, but it's not within the statistical range. For all the other subgroups, if you had a 0.9 average, you'd expect, say for age, that older people might be affected more and younger people less, but on average you'd still get that 0.9 effect. For time to onset, the average was really high. It was over 1. So whatever patients were missing there, the ones not included in that data, had an overwhelmingly positive effect, and they calculated it out. I don't know who did that, but it was on ivmmeta…

ALEX: Yeah, I’ve got the numbers here if you want.

MICHELLE: It's 0.5, right?

ALEX: Yeah. It says a relative risk of 0.51, p = 0.002.

MICHELLE: Yeah. So like, if you back calculate the missing patients, it's extremely positive for ivermectin. So it's just this question of like, why are those patients missing and why are they so positive? Is it just random chance or what? And it's still an unsolved mystery in my mind.
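The back-calculation Michelle describes is simple arithmetic: subtract the patients reported in the time-from-onset subgroups from the trial totals, and whatever is left is the "missing" group, whose relative risk you can then compute. Here is a minimal Python sketch. The counts are made-up illustrative numbers, not the TOGETHER data or ivmmeta's figures; they are chosen only so that the overall relative risk is about 0.9 while the reported subgroups sit above 1.

```python
from dataclasses import dataclass

@dataclass
class Arm:
    events: int
    n: int

def relative_risk(treated: Arm, control: Arm) -> float:
    """Risk in the treated arm divided by risk in the control arm."""
    return (treated.events / treated.n) / (control.events / control.n)

def missing_group(total: Arm, reported: Arm) -> Arm:
    """Patients counted in the trial total but absent from the subgroup tables."""
    return Arm(total.events - reported.events, total.n - reported.n)

# Hypothetical counts, for illustration only
total_t, total_c = Arm(events=9, n=100), Arm(events=10, n=100)
reported_t, reported_c = Arm(events=8, n=70), Arm(events=6, n=70)

rr_overall = relative_risk(total_t, total_c)          # about 0.9
rr_reported = relative_risk(reported_t, reported_c)   # about 1.33
rr_missing = relative_risk(missing_group(total_t, reported_t),
                           missing_group(total_c, reported_c))  # about 0.25
print(f"overall {rr_overall:.2f}, reported {rr_reported:.2f}, missing {rr_missing:.2f}")
```

The point of the arithmetic is that a modestly favorable overall result combined with unfavorable reported subgroups forces the unreported patients to look strongly favorable, which is the pattern described above. A p-value for the missing group would need a proper test on top of this.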

ALEX: Yeah, no, we don't really know. I think when we find out whatever it is that happened, this will also slot in there somehow. But again, like the sums that are, you know, plus or minus, this one is also a mystery that is related to the whole chaos, but I can't connect it directly.

ALEX: Hey Mathew.

MATHEW: Hey, Alex.

ALEX: How's it going? You're following along with the gradual loss of sanity that I'm undergoing.

MATHEW: Yeah, a little bit late. I have not done the deep dive that you have on this paper, but I was listening just now, and I thought we need some kind of terminology that describes a minimum standard of evidence. We're talking about patients missing, right? And of course this was true in the Pfizer vaccine trial: the first Pfizer vaccine trial report comes out and there are all these exclusions, and that has frustrated the hell out of me ever since that report came out. And it's not the only trial where I've seen that during the pandemic.

ALEX: Right.

MATHEW: But in particular, it's always the trials I feel the worst about. I wouldn't even want to talk about them unless I did a deep dive. That's the way you feel every time you see that. There should be a name for this. There should be a minimum standard: a set of things that is included in any trial before you even consider it complete, before you consider it a minimum standard of evidence. And this should be one of those things that is at least explained to a minimal degree.

MICHELLE: It's crazy, because I think most people, maybe the mainstream researchers, assume that if something's published in the Lancet, NEJM, or JAMA, it has passed those hurdles. So they just take the abstract at face value. But that's obviously not the case.

MATHEW: Yeah. Type equals equals while running clinical trials.

ALEX: Yeah. No, the interesting thing here, Mathew, and I don't know if you've come across these adaptive trials, is that I am just deeply conflicted. Because I actually quite love the design and the statistics they're using. It's cleaner. The Bayesian stats and stuff are beautiful. That's awesome. But like everything new, it doesn't have the safeguards it would need to be trustworthy. And not only that, it presents a bunch of knobs to manipulate things. Take the thing I mentioned before, moving benefit from ivermectin to fluvoxamine: in a standard randomized trial, you don't have that opportunity. You don't have multiple arms to play with.

MICHELLE: If they had followed the protocol, I don't think they would have had these problems. Because even in a regular RCT, if you front-loaded placebo and then back-loaded your treatment patients, that would mess up the randomization. You have to,

ALEX: Well, those are consecutive trials, right? That's what Merrick did. Not in a bad way; he actually called it out. He was like, this is what I'm doing: I'm going to take the first six months of patients and put them here, then the second six months, I'm going to put them there. That's a different kind of trial: you analyze it differently and you expect different statistical patterns, which Mathew had demonstrated, and that's why people got confused.

MICHELLE: If these aren't purposeful mistakes, and they're just things that happened because it was chaotic, they have to put them in the discussion and say, hey, there is this offset, and hey, we know there's this variant, and we're going to do our best to at least call it out. And they didn't do any of that. They didn't point out any of their mistakes. It's all hidden.

ALEX: Yep. Next! Side effect profile consistent with many treatment patients not receiving authentic ivermectin and/or control patients receiving ivermectin. I'm not too hot about this argument. I get it, but also, I don't know. And this kind of goes to the whole exclusion/inclusion vortex, which is another murky set of facts. What they're saying is basically: if you look at diarrhea, for instance, it's actually lower in the treatment arm, and that's a well-known side effect of ivermectin. So they're like, well, how could this be? And there's a similar pattern in the López-Medina paper, where blurred vision is one of the ivermectin tells, and again it was quite high in the placebo group. And they're like, what, is everybody in Colombia seeing blurred now? What's the deal? So I get it, and it's kind of an indication, but on its own it wouldn't prove anything. You could say, well, these people have been taking ivermectin forever, so they're adapted to it. There are all sorts of explanations you can come up with. It has some value to me as something that adds up with all the other ones, but if this were the only thing you had, you wouldn't say, that's it, we caught them. It's just a hint that maybe something's going sideways, and you don't really know what to make of it without more information. Same with this next one: a local Brazilian investigator reports that at the time of the trial, there was only one likely placebo manufacturer, and they reportedly did not receive a request to produce identical placebo tablets. They also report that compounded ivermectin in Brazil is considered unreliable. I think this might be Flavio Cadegiani.
I'm not sure where this is coming from. Again, interesting. If we had that written up properly, you know, the email from the manufacturer, et cetera, that's the kind of stuff that was used to take down Carvallo. So it has to count as an important thing. If the only local placebo manufacturer was not contacted, and they say, no, we have no idea how they made their placebos, then, okay, maybe they imported them. But that now has to be explained.

ALEX: Incorrect conclusion. This is one of my favorite ones, because I think ivmmeta is correct to bring it up, but incorrect, or at least partially incorrect, in how they describe it. They say the conclusion states that ivermectin did not result in a lower incidence of hospitalization or ER observation over six hours, and that this is incorrect: hospitalization was 17% lower, it's just not statistically significant. Now, first of all, the paper does not mention statistical significance anywhere. I challenge you to open it up and search for "statistically significant". Those words do not occur. The words "p value" do not occur anywhere in the paper. It is fully Bayesian statistics. So, strictly speaking, we don't actually know if it was statistically significant. We haven't seen those numbers. What we've seen is Bayesian numbers, and the Bayesian numbers tell us that the 95% credible interval still included no effect. But the interpretation of that, and I'm sure Mathew can tell us a lot more about this, is not the same as for frequentist intervals. That's why I shared this article before about credible intervals, which are kind of the Bayesian equivalent. With Bayesian stats, which a lot of people are saying are better, whatever. Let me put it the other way. With frequentist statistics, if you have an interval, you can make basically no statements about where the value is within that interval. That's why they say that if it crosses the one line, if there's a chance it could actually be harmful, then you really have to stay away, because you can make no statements, even if it only crosses a little bit. You can make no statements about where the actual weight of the evidence is.
It could actually be on the negative side, and you really should not try to parse that interval. With Bayesian stats, that's not the case. You see a bell curve, and that bell curve tells you where the probability is. So the closer you get to the middle, or wherever the top of the bell is, the more likely the true value is to be there. You can't say that with frequentist statistics. So this is the thing that just drives me insane. If you go to the supplementary appendix and look at the figure, I believe it's S2, something that's been sent to the back of the library, you will see these Bayesian stats. And they do say that if we take all the patients, intention to treat, ivermectin comes out with an 81.4% probability of superiority. If we take modified intention to treat, which excludes patients who had an event within 24 hours of being randomized, ivermectin comes out ahead with a 79.4% probability of superiority. And then if we take the per-protocol numbers, which is what Michelle was talking about before, basically only the 3-day patients, ivermectin comes out ahead about 64% of the time. Let's leave the per-protocol thing out, because it's a mess and we don't really understand how it works. But the other ones are roughly an 80% chance that ivermectin is superior. Now, how did they write this conclusion? "Did not result in a lower incidence." Not only do you not have the confidence to say that black and white, you have grounds to say something positive. If I tell you, here's a treatment, it might help you, but only four out of five times, an 80% chance it helps, would you say, well, since I don't know about the fifth time, there's no indication it helps? Those are the statements they went and made to the press.
They said, no indication of clinical usefulness. Not even a hint. Okay, all right, I get it. But the numbers are showing different things. And again, because it's Bayesian stats, we aren't limited by the classical frameworks that put extremely tight limits on how you're allowed to interpret things. Bayesian intervals are far more intuitive: they mean what people normally think intervals mean. A lot of people mistakenly think that confidence intervals mean what Bayesian intervals actually mean, because that's the intuitive explanation.
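The gap between "the 95% interval includes 1" and "about an 80% probability of superiority" can be shown with a normal approximation to a posterior on the log relative-risk scale. In this minimal Python sketch, the posterior mean matches the 17% reduction quoted above, but the posterior standard deviation (0.22) is a hypothetical value chosen for illustration; this is not the model or the numbers behind the TOGETHER appendix figure.

```python
import math
from statistics import NormalDist

# Hypothetical posterior for log(RR): mean from a 17% reduction, SD assumed
posterior = NormalDist(mu=math.log(0.83), sigma=0.22)

# 95% equal-tailed credible interval, mapped back to the RR scale
lo = math.exp(posterior.inv_cdf(0.025))
hi = math.exp(posterior.inv_cdf(0.975))

# Posterior probability of superiority: P(RR < 1), i.e. P(log RR < 0)
p_superior = posterior.cdf(0.0)

print(f"95% CrI: {lo:.2f} to {hi:.2f}")
print(f"P(RR < 1) = {p_superior:.2f}")
```

With these assumed numbers the interval runs from roughly 0.54 to 1.28, so it crosses 1, while the posterior probability that the treatment is superior is about 0.80. Working on the log scale keeps the ratio symmetric around no effect, which is why the normal approximation is placed there rather than on the RR itself.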

ALEX: Anyway. Yeah. So the conclusion is definitely incorrect. Yeah, go ahead, Matt.

MATHEW: Yeah, if I could jump in here. I'm kind of an applied statistician. We did lots of Bayesian analysis when I was on Wall Street. If we were modeling something and were curious about whether a variable had an effect on a system, in my experience almost all of these probabilities are pushed away from the extremes in practice when you're doing Bayesian analysis. In other words, if you get a probability of 12%, the truth is probably closer to 0%. If you get a probability of 80%, it's probably closer to 100%, once you start running your machine in practice. And it's one of those things where there's no mathematics that justifies anything like that. And really and truly, this isn't even the way statistics is supposed to be used. Biomedical applications are tenuous at best, because the statistics are not as capable of giving you a real number as they're made out to be. I mean, what does 80% mean anyway? Are we in a quantum state or something? So really, it's more up to judgment than anything, but that's my experience with applied statistics.

ALEX: Yeah. And of course, it's 80% if you're facing the Gamma variant, if you're so many days after symptoms, yada yada yada. How you take that and make a statement about the broader world is another issue, probably part of what you're pointing at. I don't know if you've seen this, Matt, but I've put it at the top of the space: this figure. If you haven't seen it before, you'll freak out, because this is in the appendix and they literally spell out that it's roughly an 80% probability of superiority, but in the conclusion of the main paper and in the press, they say the exact opposite. When I saw this, I was baffled. And by the way, this figure is significant for another reason. They do a lot of Bayesian stats in the TOGETHER trial in general, and they're very proud of them. These same diagrams are featured in the metformin paper, the hydroxychloroquine paper, and the fluvoxamine paper, and they're always in the main body of the paper. With ivermectin, they pushed it back to the appendix, again with no explanation why. You start to get the picture of the direction of the decisions, but it's just worth noting.

ALEX: Okay. Next: ivermectin use widespread in the community. (skimming) So this is weird. This is super weird, and I've dug super deep into this, so I want to get my state of mind out to the world on this. The original presentation on August 11 did not mention anything about excluding for ivermectin use. And this was highlighted. Steve Kirsch, who's on good terms with Mills, also wrote somewhere (I can't find that article now for the life of me, but I read it at the time) that this was a limitation of the trial: it did not exclude for ivermectin. And even Mills has been quoted as saying, yeah, sure, but use in the community wasn't that high anyway, so it washes out. First of all, if it wasn't that high, then why didn't you put in an exclusion criterion? That has to be clarified somehow. Why the hell didn't you just rule it out? It's the obvious thing you do. You want to know if your control group is clean. But then when they finally publish the paper, you look at the exclusion criteria, and it does not say ivermectin use. You look at the protocol, and it does not say ivermectin use. You look at the normal places you would look, and there's no hint that they excluded for use of ivermectin. But in the discussion section, they have this weird paragraph, a couple of sentences, about how of course we extensively screened our patients for ivermectin use for COVID. Trust me, these words were workshopped over and over again to find the right ones. And of course we excluded them, et cetera, et cetera. Now, I've got a number of questions here. First of all, why is this in the discussion and not in the exclusion criteria of the paper? That's where you put these things. This is not complicated. Secondly, what is this “for COVID” thing?
If they used ivermectin because it was a traditional cure for malaria, did they get to go in the control group? In general, it's just baffling. And the weird part is that the authors kind of come out swinging there, like, “and I'm sure the next thing you're going to say is that we didn't dose them enough, huh?” No, dude, there's a way we do these things. You're supposed to put your exclusion criteria in the exclusion criteria. How did you know this? Because the forms don't include a checkbox for “used ivermectin”. What they do have is a place to note down concomitant medications. So we have to accept that at the places where they ran this trial, which are 12 separate sites, they filled in these long forms with the patients, asked them about every medication they took, and filled in everything. And then, in the medication box where maybe some patients said yeah, they had taken some ivermectin, there's a field for the reason they used it, and in there they filled in COVID.

ALEX: So let me summarize this for you in a way that'll make sense. These people were not able to get how long it was from symptom onset for 23% of their patients. They couldn't get an answer, or at least they don't seem to know the answer, for “how long since you’ve had symptoms?” They're missing ages of patients, right? And you're telling me that they went through all the concomitant medications, found whoever was using ivermectin and the reason why, and then excluded those, right? My sense is that, and this is why this “for COVID” thing is there, even if some had written ivermectin, they just didn't fill in the reason, and therefore the authors here can claim that, well, it was for parasites, it was probably a low dose, and it was probably, like, twice a year or whatever, so it doesn't matter. But that is not extensive screening. Somebody said, actually, that maybe they went back afterwards and called everybody after this was raised as an issue. But the number of patients cited in the paper is exactly the same as the number cited on August 11. So if they did that, they didn't exclude anybody. And the other thing that I find really weird is that when Mills talks about this, it's like, well, you know, use in the community wasn't that high anyway. Here's the problem with that. First of all, I have a publication from the area, from Brazil, from exactly the time the trial was ongoing, saying that ivermectin use shot up nine times. Ninefold. And secondly, that publication is not pro-ivermectin. It actually talks about, oh, we're freaking out about the safety issues or whatever. So this is not something where you could say, okay, well, these guys are clearly biased, they're trying to, like, repeat a trial or something. This is just a normal Brazilian website.
You can put it through Google Translate and it'll show you straight up: it says a ninefold increase in use. Now, Mills does not know this. You might say, okay, it's not his obligation to know this. Yeah. But if he had actually extensively screened, and had actually excluded a bunch of patients, he would have seen the same thing. He would have seen a big mass of patients taking ivermectin. The fact that he didn't see it means we are forced to compare. It's like, okay, is this website lying for some reason? Can we get the underlying sales data to see how sales were going at that time? And if they were high, why didn't they see that in the trial? There are a lot of questions here.

ALEX: Anyway, I just thought of another explanation, right? Maybe the people who did get ivermectin did so much better that they were fine, and then they didn't go to the trial. So that's confounding in a different way. That may not be insignificant, actually. Let's say ivermectin works for a subset of people.

MATHEW: Can I jump in real quick?

ALEX: Yeah, yeah, please. Go ahead.

MATHEW: This sounds like informative censoring, and the fact that they would run the trial in the same location. So, okay. Informative censoring is what took place in the Dagan study, the study on vaccines in Israel, where they had what they call rolling cohorts, where people in the study were sometimes measured as if they were unvaccinated and sometimes measured as if they were vaccinated. What this does is create a situation where some people are measured more completely, as if the measurement was run to exhaustion, and some people are not. So in the Dagan study, you had all these people who were vaccinated who died at a point later than was measured. But that death was literally left out of the computations.

ALEX: Oh, right. This is the same kind of 28-day follow-up stuff, right? Where they're like, if you're dead on day 30, it doesn't count.

MATHEW: Exactly, because once they were no longer matched with a person in the placebo arm who died, their period of observation was cut off. And if what you have is a period of observation that's cut off prior, that's the same thing. It's still informative censoring. And when you do that kind of informative censoring, the standard is that you have to run a sensitivity analysis before you make your claims. All these types of issues are exactly why I think that with Bayesian estimates, the reality is that they usually move toward the extremes. When you're done with a Bayesian analysis, you should have a list of the things that were left out of it that would move the curve.

ALEX: Right?

MATHEW: And if they're all going to move the curve in the same direction, that's where things look really suspect. I actually suggested a note on this basis on a blog post that Norman Fenton just wrote up. He was looking at the Sheldrick attack on Marik, and Fenton ran a Bayesian analysis on what the probability of fraud is. But all of the things you could list that did not go into the Bayesian analysis move that probability in the same direction. So I said, hey, just mention that this is a ceiling on the probability. In this case, for this study, it sounds like it should be a floor on the probability.

ALEX: Yeah, exactly. That's a very good way to put it. Especially given some of the other items we're going to talk about.

ALEX: Anyway. And by the way, just to mention, when we say ivermectin sales shot up in the area: this was the official recommended treatment by the government for COVID, right? In Brazil, at that moment in time, there was this thing called the COVID kit, very politically contentious. Not everybody took it. Actually, the numbers I've seen are that about 25% of people took it. The establishment hated it, but if we're looking at what was the recommended treatment by the Brazilian government, they gave you the whole panel: ivermectin, hydroxychloroquine, azithromycin, I believe, and a few other things. So it's not unthinkable that it would be getting used, given that the government was saying use it. And the fact that they just added this note in the discussion, “yeah, sure, of course we excluded them,” tells me that they probably did a check after the fact and constructed a description of the situation that would allow them to justify their prior results without excluding any patients. But in reality, they didn't ask as they should have, and they did not exclude as they should have. That's my sense. But again, if I could see the data, I would have a much better understanding, and of course we don't get the data.

ALEX: Anyway. Okay, the next one. I'll get really worked up here, because I really think this is important separately from everything else, but I do think it ties into the story: single-dose recruiting continued after change. This is one I dug up. I was looking at their applications to the Brazilian ethics committee for the protocol, right? So the trial, the ivermectin part, began on January 20th. The protocol they sent to the Brazilians in order to reset the ivermectin arm from low-dose to high-dose is itself dated February 15th, and in fact, this protocol is what is attached to the paper. If you go to the protocol, you can see that at the bottom it says working paper, February 15th. Okay. By February 15th, however, the low-dose arm had only recruited 19 patients, as far as I can tell. The rest of the patients, 59 patients, were recruited into the low-dose arm after they were clearly intending to reset the trial. And that, I assume, was because they thought the dose was too low. So why are you recruiting patients to an arm of a trial that you've already decided to terminate, so that the data is going to be thrown away? And presumably, because you believe that dose is too low, you're not going to help these patients. 59 patients, right? If we believe the fluvoxamine data, and these patients could have been allocated to fluvoxamine, somebody statistically died because of this, and it's not okay at all. And there's no explanation, right? Again, normally what happens is the DSMC terminates the arm, a new protocol is written up, the requests for authorization are sent, you get the authorization back, and you start over. Here, we don't know what the DSMC said. There's no mention of any decision, but there's a new protocol that appears just a few weeks into the low-dose ivermectin arm. They continue the low-dose arm for quite a while, and then they terminate it on March 4th.
We don't know why that date was chosen. Then they get back the response on March 15th. They say that they got it back on March 21st, which is like, why lie about that now? You can see on the Brazilian website that it clearly says March 15th. But they report that they got the approval back on March 21st and that they started on March 23rd. What this looks like to me is that they had complete control over both when the low-dose arm ended and when the high-dose arm started. Not good. We can talk about messing with the data, but these are real people who were allocated to an arm that the people running the trial did not, themselves, appear to believe would help them, and even if it did, they did not intend to use the data they would extract from it. I think, beyond the manipulation element that it highlights, it's a massive ethical lapse, and I don't know where the board was at that time. Yeah. I think this is probably the one that, I mean, arguably there are others that are worse in their effect on the real world, but these are actually 59 real people that I can sort of visualize in my head, and it upsets me a lot more than the other ones.

ALEX: Anyway, next one: per-protocol population different to the compared contemporary fluvoxamine arm. I think this is because the per-protocol definition is different, right? The per-protocol definition in the fluvoxamine arm is just: did they follow the protocol, whatever that was? Whereas in the ivermectin arm it was: did they take the 3-dose placebo? And therefore the numbers are very different, because it's like 92% adherence in the fluvoxamine arm and 42% adherence in the ivermectin one. But I think this is a matter of “per-protocol” not meaning the same thing in these two trials. So that's bad. But I don't think it goes deeper than different definitions, which is not a good thing, by the way, just to be clear.

ALEX: The next one: time of onset required for inclusion missing for 317 patients. These are the same 317 patients we talked about with Michelle before. Not only did these patients do extremely well, which is weird, and not only are they missing from the subgroup analysis because they didn't know the time from onset, there's a real question here of how the hell you put them in the trial to begin with if you didn't know how long it had been since symptoms started. You claim that they have to be at most seven days from symptoms to be added to the trial, so you need that answer. Now, what might have happened is that they could vouch that it was less than seven days, but not exactly how much. So they get past the binary hurdle, but not with enough precision. But again, this requires explanation. And of course there are missing figures for BMI, et cetera, et cetera.
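To make the eligibility problem concrete, here is a minimal Python sketch, assuming a hypothetical per-patient field `days_from_onset` that is `None` when onset was never recorded, and the at-most-seven-days window cited above. It shows that a patient with a missing onset time cannot be classified as eligible or ineligible, only flagged as unverifiable. The function names and sample values are illustrative and do not come from the trial's data or code.

```python
from typing import Iterable, Optional

MAX_DAYS_FROM_ONSET = 7  # "at most seven days from symptoms", as stated above


def onset_eligibility(days_from_onset: Optional[int]) -> str:
    """Classify one patient against the symptom-onset inclusion window.

    None means onset was never recorded, so eligibility cannot be checked.
    """
    if days_from_onset is None:
        return "unverifiable"
    if 0 <= days_from_onset <= MAX_DAYS_FROM_ONSET:
        return "eligible"
    return "ineligible"


def summarize(onsets: Iterable[Optional[int]]) -> dict:
    """Count patients in each eligibility category."""
    counts = {"eligible": 0, "ineligible": 0, "unverifiable": 0}
    for days in onsets:
        counts[onset_eligibility(days)] += 1
    return counts


# Hypothetical sample: two in-window patients, one missing onset, one out of window.
print(summarize([2, 5, None, 9]))
# {'eligible': 2, 'ineligible': 1, 'unverifiable': 1}
```

A yes/no “under seven days” attestation, as speculated above, would move a patient from unverifiable to eligible but would still leave the subgroup analysis without a number.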

ALEX: Conflicting co-morbidity counts. This is a really fascinating one, and I've got a lot more work to do on it, because I've started digging into it and it's baffling. What they're saying is basically: if you understand how the patients are structured in the trial, the ivermectin placebo arm is almost completely a clean subset of the fluvoxamine placebo arm, right? So basically 99% of the placebo patients in the ivermectin arm must also be patients in the fluvoxamine placebo arm. That's the whole point of this trial, by the way, right? The reason why you're running all these arms at the same time, sort of spinning plates, is to share the placebo arm, and there's an ethical argument for this: you get to put fewer patients at risk. And there's a financial argument for this: you get to use the same money to learn more. Great. I'm just saying that it's not strange, in principle, that the placebo arm was shared. However, when they talk about co-morbidities, the fluvoxamine arm shows 16 patients with asthma. The ivermectin arm shows 60 patients with asthma. It's like, okay, it can't be more, right? It has to be the same or less. And there are other numbers like that where the co-morbidities don't match. So then you're like, okay, was it the same arm or not? Because if it wasn't, then how did you randomize it? That opens up a lot more questions. Maybe there's an answer here. I don't know what it is.
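The subset argument can be written down as a simple consistency check. This is a minimal Python sketch, assuming (hypothetically) that both papers count a co-morbidity the same way and that every shared placebo patient carries the same record in both reports. Under those assumptions, each patient outside the overlap can add at most one case, so the subset arm's count can exceed the superset arm's count by at most the number of non-shared patients. The function name and the `non_shared` values are illustrative; only the 60 and 16 asthma counts come from the discussion above.

```python
from typing import Dict, Tuple


def inconsistent_counts(
    subset_counts: Dict[str, int],
    superset_counts: Dict[str, int],
    non_shared: int,
) -> Dict[str, Tuple[int, int]]:
    """Flag conditions where the (near-)subset arm reports more cases than possible.

    subset_counts   -- condition -> case count in the arm that should be a subset
    superset_counts -- condition -> case count in the arm it should be drawn from
    non_shared      -- patients in the subset arm who are NOT in the superset arm;
                       each can contribute at most one extra case
    Returns condition -> (reported count, largest count consistent with overlap).
    """
    flagged = {}
    for condition, reported in subset_counts.items():
        upper_bound = superset_counts.get(condition, 0) + non_shared
        if reported > upper_bound:
            flagged[condition] = (reported, upper_bound)
    return flagged


# Asthma counts as quoted in the discussion; non_shared values are hypothetical.
print(inconsistent_counts({"asthma": 60}, {"asthma": 16}, non_shared=0))
# {'asthma': (60, 16)}
print(inconsistent_counts({"asthma": 60}, {"asthma": 16}, non_shared=50))
# {}
```

Under these assumptions, at least 44 ivermectin placebo patients (60 minus 16) would have to sit outside the fluvoxamine placebo arm before the two asthma counts became arithmetically compatible.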