Hello, World!

Had you told me my first Substack post would be written “contra Scott Alexander”, I would have called you a crazy person. In fact, it’s particularly odd, given that my activation on Twitter during this pandemic was specifically *because* of Scott Alexander. This was at the time the NYT was threatening to unmask his identity, something so pointlessly cruel that it threw me off balance for a week or so.

My admiration for Scott goes way, way back. I came across him when he was writing as Yvain in a community called LessWrong, a community that has been dedicated to human rationality and AI risk since before it was cool. One of the most formative experiences I had there involved an article asking us to take a position on the (then ongoing) case of Amanda Knox. The rationalist community was largely able to deduce that Amanda was innocent, even though the press and public sentiment had been turned largely against her by a prosecutor who would stop at nothing to get another scalp.

The number of procedural boundaries broken was baffling. They ranged from accusing Amanda of instigating a satanic sex ritual to, I kid you not, pushing a real-time PCR test far beyond any reasonable cycle threshold to “find” the victim’s blood on a knife in Amanda’s boyfriend’s house. The lesson I took away has never left me. Not only can people in power be badly mistaken in matters pertaining to their fields of expertise, but internet communities can dig into a situation deeply enough to figure out in real time that said people in power are wrong.

As the pandemic rolled on, I started writing more and more on Twitter about things such as narrative reversals in the recent past, where the “respectable” opinion was obviously wrong in retrospect. I dug into the history of the lab leak hypothesis to uncover several uncouth connections. I mention all this so that the unsuspecting reader understands where my skepticism of establishment narratives comes from. One can only see so many of them collapse like a house of cards before developing a generalized suspicion of anything that comes down from the top.

Thankfully, Scott’s NYT adventure ended well (at least for us, I hope for him too) and he’s back, sharing his broad and deep interests on his Substack.

And then, about a week ago, our worlds collided as Scott wrote on a topic I had been tracking closely: Ivermectin: Much More Than You Wanted To Know.

Summarily Steelmanning Scott

One of the traditions coming out of the rationalist community is called “steelmanning”. It involves taking the opposing argument and articulating it well enough that its proponents would agree you have done it justice. So here’s what I see Scott saying in his piece, as a form of steelman summary:

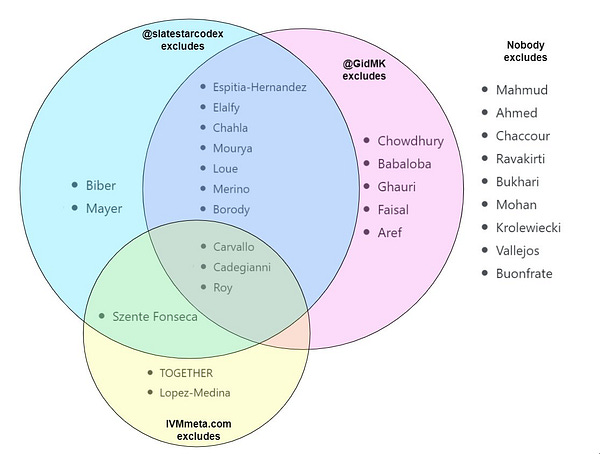

Starting from the ivmmeta.com list of 29 early treatment studies on ivermectin, he goes through each and evaluates its validity based on a range of criteria, concluding that 13 of the studies look untrustworthy, for the following reasons:

2 for fraud

1 for severe preregistration violations

10 for methodological problems

He then excludes another 5 studies because epidemiologist Gideon Meyerowitz-Katz was suspicious of them, for a combination of serious statistical errors and small red flags adding up. This leaves Scott with 11 studies that neither he nor Gideon Meyerowitz-Katz has excluded from their analysis. Scott analyzes these 11 studies and finds an effect that is not statistically significant. When he changes the endpoint he used for each study, he ends up finding a borderline statistically significant effect, but he is suspicious of that significance because he had to follow an unprincipled path to get there.

He then moves on to the synthesis part of his piece, where he reveals that while he sees some kind of signal in the noise, he has been made aware of a hypothesis that would explain this signal in a more parsimonious way. That hypothesis is that infection by Strongyloides stercoralis, basically a kind of intestinal roundworm, may explain what we see in the studies. There are a few different mechanisms that could be in play, but the best supported one is that COVID patients, especially in the ICU but sometimes earlier, have been getting corticosteroids as part of their treatment. Normally, those patients should be given ivermectin before the steroids, to avoid what is called “worm hyperinfection”, where the steroids suppress the immune system in a way that lets the roundworms escape its containment and kill the host.

However, in some of the ivermectin studies, there is cause to suspect that the control group wasn’t given ivermectin, to avoid contaminating the control, which would have triggered some cases of hyperinfection. Dr. Avi Bitterman has produced an analysis that aims to demonstrate that the studies showing a mortality benefit for ivermectin correlate significantly with the prevalence of these roundworms, which would explain any mortality benefit. Bitterman has also produced an analysis suggesting that other effects, such as ivermectin’s apparent benefits on viral replication, are the result of simple publication bias.

Scott finds this analysis at least 50% convincing, and states his current position as follows: to the extent that there is some signal in the ivermectin studies, it is likely explained by the worms hypothesis.

He then proceeds to describe a number of takeaways that I won’t get into, as my response focuses on the core of the analysis described in the summary above.

Trust No One

Reading the article, I was surprised (but not really) by the central role Gideon Meyerowitz-Katz (henceforth GidMK, his much shorter Twitter handle) had played in the analysis. The man has a way of apparating wherever a person of influence takes a position on ivermectin. This is at least the third time I’ve observed the same sequence of events.

In particular, while Scott’s exclusions look reasonable and even-handed, the 5 papers that Scott excluded *because* of GidMK look to be either big and positive studies, or small and *very* positive. Being highly suspicious that these exclusions by GidMK had shifted the analysis entirely, but not wanting to let that prevent me from seeing something that was true, I decided to tie myself to the mast. I actually declared my intention to do a triple exclusion analysis, to see what the studies accepted by Scott Alexander, GidMK, and ivmmeta.com show, as a consensus set of three opinions that come from very different places.

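The next morning, I added more details about the experiment I was intending to run. I wanted to see what Scott’s exclusions would do on their own, what GidMK’s exclusions did when added to Scott’s exclusions, and what the ivmmeta.com exclusions would do when added to Scott’s exclusions, as well as when added to Scott’s and GidMK’s exclusions. That last one was the originally promised “triple exclusion” analysis. In short, I wanted to see these 5 analyses:

The complete set of early treatment studies at ivmmeta.com

After Scott’s exclusions

After Scott’s and ivmmeta.com’s exclusions

After Scott’s and GidMK’s exclusions

After Scott’s, GidMK’s, and ivmmeta.com’s exclusions (the “triple exclusion”)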

I pulled some favors, and I have fascinating results to share.

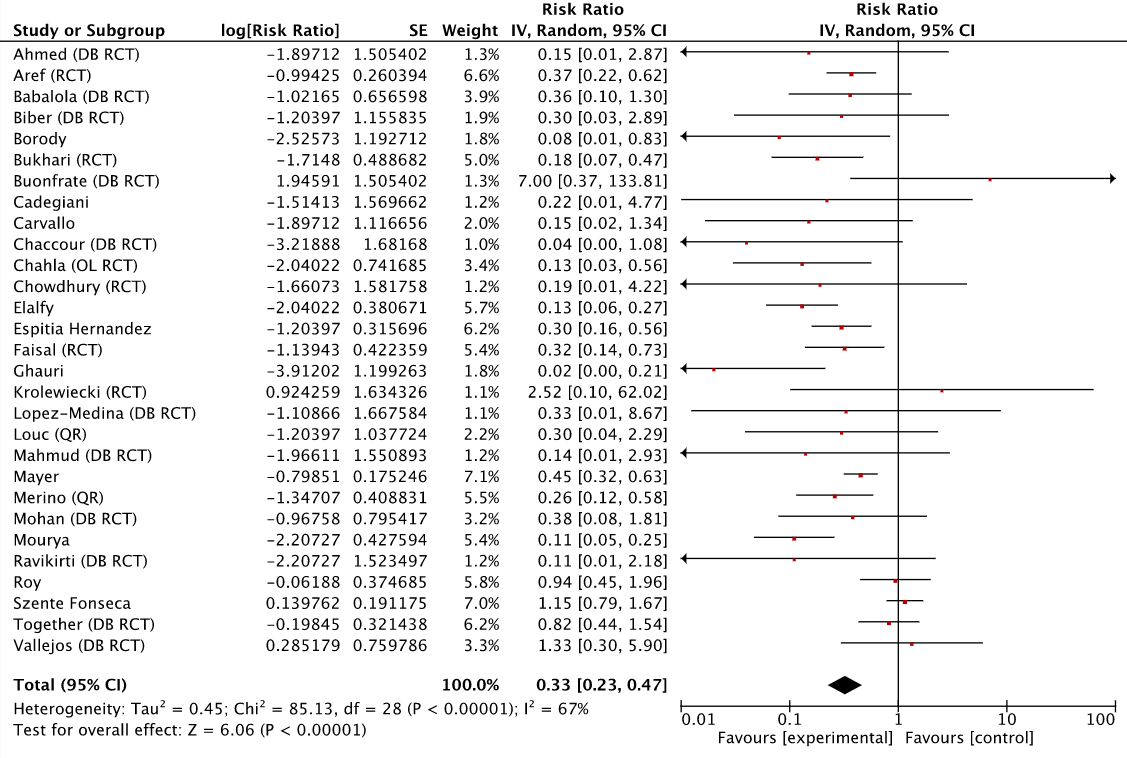

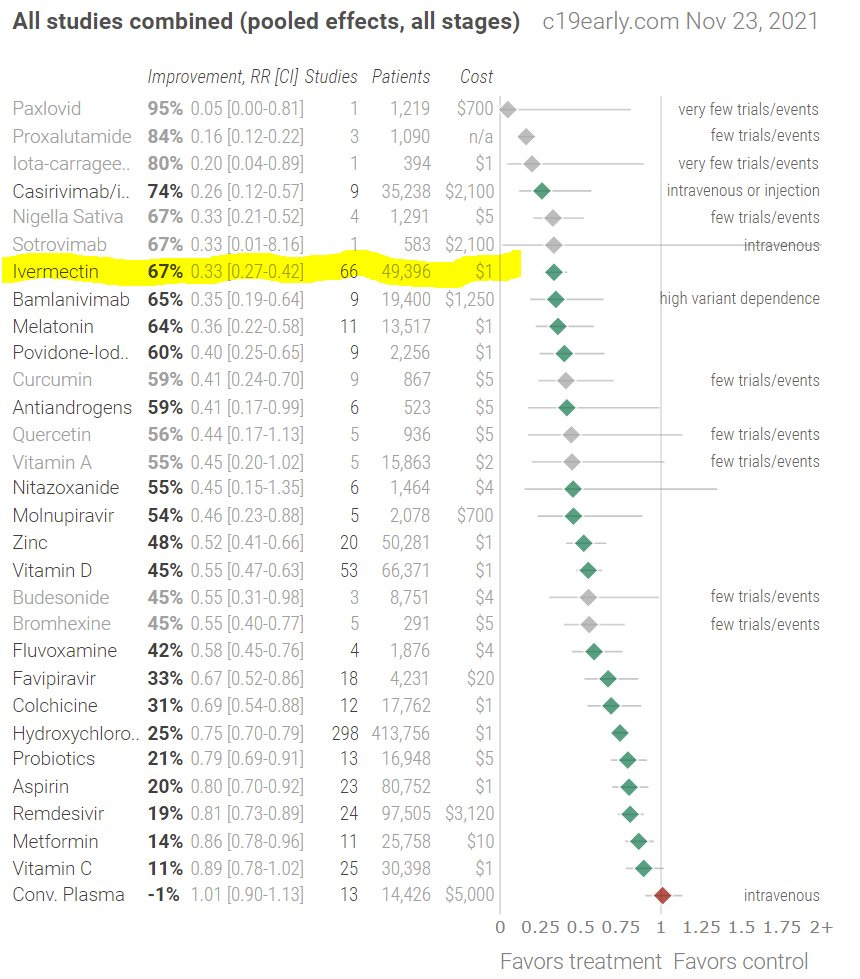

To start, here’s the complete set of early treatment ivermectin studies as presented at ivmmeta.com, run through RevMan, which comes to roughly the same result as the site itself. Early treatment with ivermectin produces a 67% improvement, or, as RevMan outputs it, a risk ratio (RR) of 0.33 with a confidence interval (CI) of 0.23-0.47:

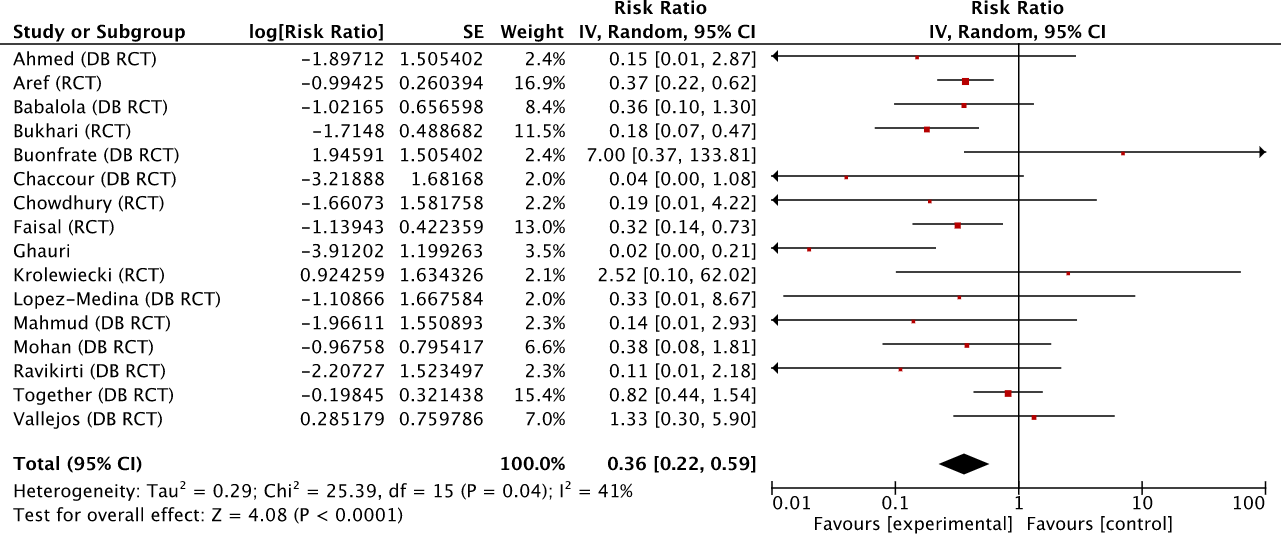

We then move on to the set of studies after Scott’s exclusions. Notice that the risk ratio (RR) barely moves (0.36 or 64% improvement), though the confidence interval widens, if only a bit.

For brevity I’ll spare you the third analysis which I will summarize below, and move on to the fourth. Let’s see what happens when we remove the studies recommended for exclusion by GidMK.

The difference is far larger than the one caused by Scott Alexander’s exclusions. The risk ratio moves to 0.45 (or 55% improvement). About 9 percentage points of ivermectin’s estimated effect evaporate (from 64% to 55%), and the upper bound of the CI stretches all the way to 0.94. For those of you into P-values (which don’t mean what the vast majority thinks they mean), we go from P<0.0001 without GidMK’s exclusions to P<0.03 with them. In other words, the exclusions recommended only by GidMK make the analysis on the remaining set of studies far more uncertain, though it does stay within what is considered “statistically significant” territory. I’m not sure how Scott ended up with a P<0.15, but the difference is large enough to make me suspect a mathematical error somewhere.
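Since P-values keep coming up, here is a minimal sketch of how one can approximately back-calculate a two-sided P-value from a reported risk ratio and its 95% CI, following the method described by Altman and Bland. It assumes the CI was computed symmetrically on the log scale (the usual normal approximation for ratio measures). The helper name `p_from_rr_ci` is my own, and because published RRs and CI limits are rounded to two decimals, the result is only approximate and may not match RevMan’s output exactly.

```python
import math

def p_from_rr_ci(rr: float, lower: float, upper: float,
                 z_crit: float = 1.959964) -> float:
    """Approximate two-sided P-value for a risk ratio from its 95% CI.

    Assumes the interval is symmetric on the log scale, i.e.
    log(upper) - log(lower) = 2 * z_crit * SE(log RR).
    """
    se = (math.log(upper) - math.log(lower)) / (2 * z_crit)
    z = math.log(rr) / se
    # Two-sided normal tail area: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2)).
    return math.erfc(abs(z) / math.sqrt(2))

# Full early treatment set as reported in this post: RR 0.33, CI 0.23-0.47.
p_full = p_from_rr_ci(0.33, 0.23, 0.47)
print(f"P = {p_full:.1e}")  # well below 0.0001
```

As a quick sanity check of the conversion, an interval whose upper bound sits exactly at 1.0 should come out at P ≈ 0.05.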

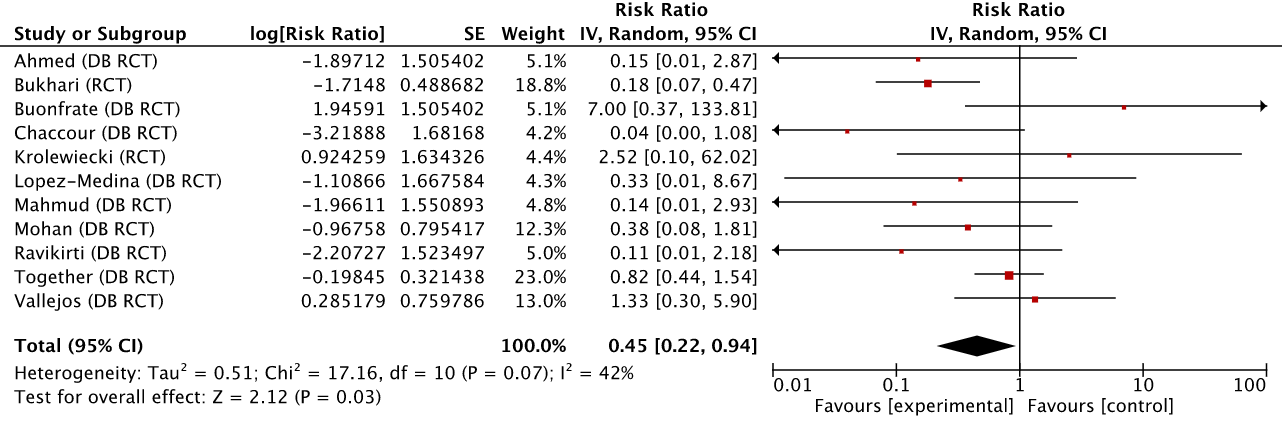

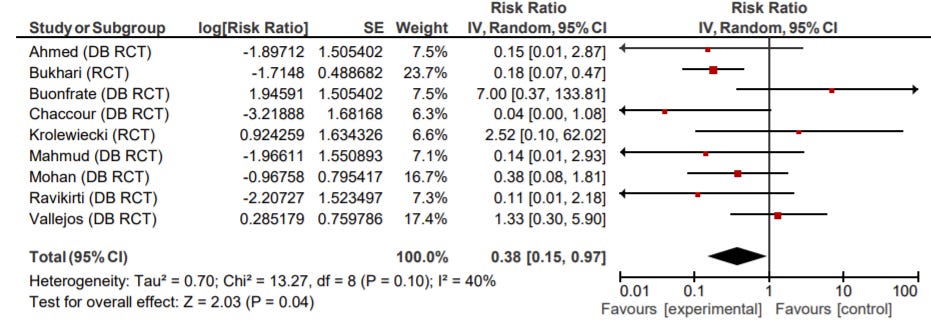

If, in addition, we remove the 2 studies in this set that ivmmeta.com excludes (Lopez-Medina and Together), we are left with the originally promised triple exclusion analysis. The remaining studies are accepted by Scott Alexander, GidMK, and ivmmeta.com.

The resulting risk ratio is 0.38 (or 62% improvement), somewhere between the one we started with and what it was after the GidMK exclusions. While the CI has widened, it is remarkable that we are still within the bounds of “statistical significance”. Not because that is the be-all and end-all of tests, but because we get to avoid a pointless argument on the meaning of p-values and the bright line fallacy.

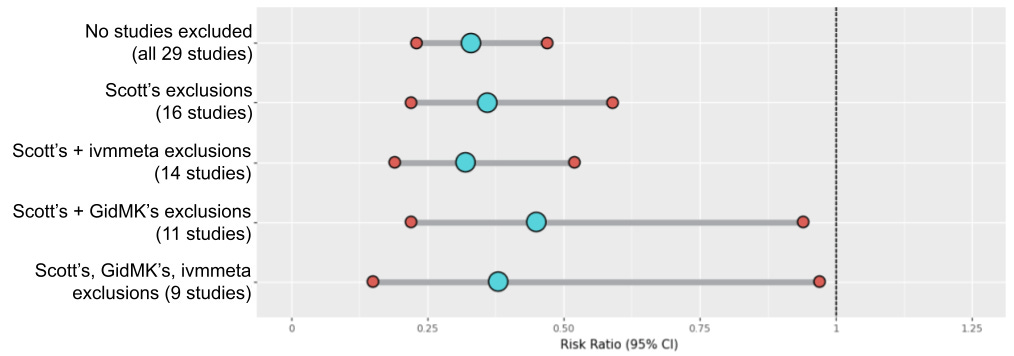

Overall, the 5 pre-announced analyses put together end up looking something like this:

The way to read this chart, in brief, is that narrower intervals are better (they indicate more certainty), and being further to the left is better (a larger effect). The risk ratio, very briefly, is the rate at which patients taking the intervention (in this case ivermectin) experience the bad outcome being measured, divided by the rate in the control group. So an RR of 0.33 indicates that the intervention group experiences that outcome about a third as often as the control group, i.e., it does roughly 3x better. ivmmeta.com chooses the most serious endpoint it can find in each study, which I find to be a pragmatic compromise for the kind of bottom-up dataset we’re looking at.
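The arithmetic behind reading an RR is simple enough to sketch. Assuming “improvement” means relative risk reduction (1 - RR), which matches how the numbers in this post are quoted, the snippet below converts the risk ratios reported above into percentages and computes how many times more often the outcome occurs in the control arm (1/RR). The function names are my own, for illustration only.

```python
# Risk ratios as reported in this post for each exclusion scenario.
REPORTED_RR = {
    "All early treatment studies (ivmmeta.com)": 0.33,
    "After Scott's exclusions": 0.36,
    "After Scott's and GidMK's exclusions": 0.45,
    "Triple exclusion": 0.38,
}

def relative_improvement(rr: float) -> float:
    """Relative risk reduction, 1 - RR (e.g. 0.33 -> 0.67, i.e. 67%)."""
    return 1.0 - rr

def control_to_treated_ratio(rr: float) -> float:
    """How many times more often the outcome occurs in the control arm (1 / RR)."""
    return 1.0 / rr

for label, rr in REPORTED_RR.items():
    print(f"{label}: RR {rr:.2f} -> {relative_improvement(rr):.0%} improvement, "
          f"control arm at {control_to_treated_ratio(rr):.1f}x the event rate")

# Percentage-point change between Scott's set and Scott's + GidMK's set.
drop_pp = (relative_improvement(0.36) - relative_improvement(0.45)) * 100
print(f"Change: {drop_pp:.0f} percentage points")  # prints "Change: 9 percentage points"
```

The “3x better” for an RR of 0.33 is just 1/0.33, which rounds to 3.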

It is incredibly clear that Scott’s own exclusions don’t move the conclusion much from what ivmmeta.com shows, even though he excluded almost half the studies. His trust in GidMK, however, waters down the power of the dataset to the point where the effect starts to look uncertain, even if ivermectin still looks more likely than not to have a significant effect.

When you torture a dataset this much, and it still doesn’t tell you what you want to hear, well, you have to start wondering if maybe the poor dataset *really* believes what it’s telling you. I must admit that I did not expect that after all the exclusions we would still be in what is commonly accepted as “significant” territory, but them’s the maths.

Nevertheless, none of this should be taken to be an official meta-analysis, or even particularly scientific. What I intended to show, and I think this exercise makes clear, is that Scott’s conclusions are very much dominated by what he excluded because of GidMK. Had he not done so, the conclusion of his analysis would have been very different.

The GidMK phenomenon

I wanted to avoid focusing on GidMK himself, but given how the analysis comes out, this won’t be possible. I’ll try to keep things as dispassionate as possible without being fake, but I beseech the reader to understand that this isn’t your standard “ad hominem” argument. If Scott’s analysis depends on his trust in GidMK, it is paramount that I demonstrate that GidMK’s track record does not deserve that trust.

Scott mentions the experts having a less-than-optimal record in this pandemic. You can say that again.

> But the experts have beclowned themselves again and again throughout this pandemic, from the first stirrings of “anyone who worries about coronavirus reaching the US is dog-whistling anti-Chinese racism”, to the Surgeon-General tweeting “Don’t wear a face mask”, to government campaigns focusing entirely on hand-washing (HEPA filters? What are those?) Not only would a recommendation to trust experts be misleading, I don’t even think you could make it work. People would notice how often the experts were wrong, and your public awareness campaign would come to naught.

So what were Gideon’s takes on these topics at the time?

I’ll let you draw your own conclusions.

I’ve had prior run-ins with GidMK on Twitter and have found him so reliable at repeating the prevailing “respectable” opinion that the best way I have of knowing what that respectable opinion is at any given moment is to find out what GidMK thinks about the topic. I literally predicted the opinions he would hold at different times based on what I knew the establishment position was at the time, and had basically 100% success. Lab leak? Check. Aerosol transmission? Check. I’m not kidding, try it and see for yourself. I don’t know why this is, but I do know it sets up a win-win. If one does this and is right, they get to claim credit, and if they’re wrong, they get to diffuse responsibility to the unnamed “experts”, never taking a hit to their own credibility.

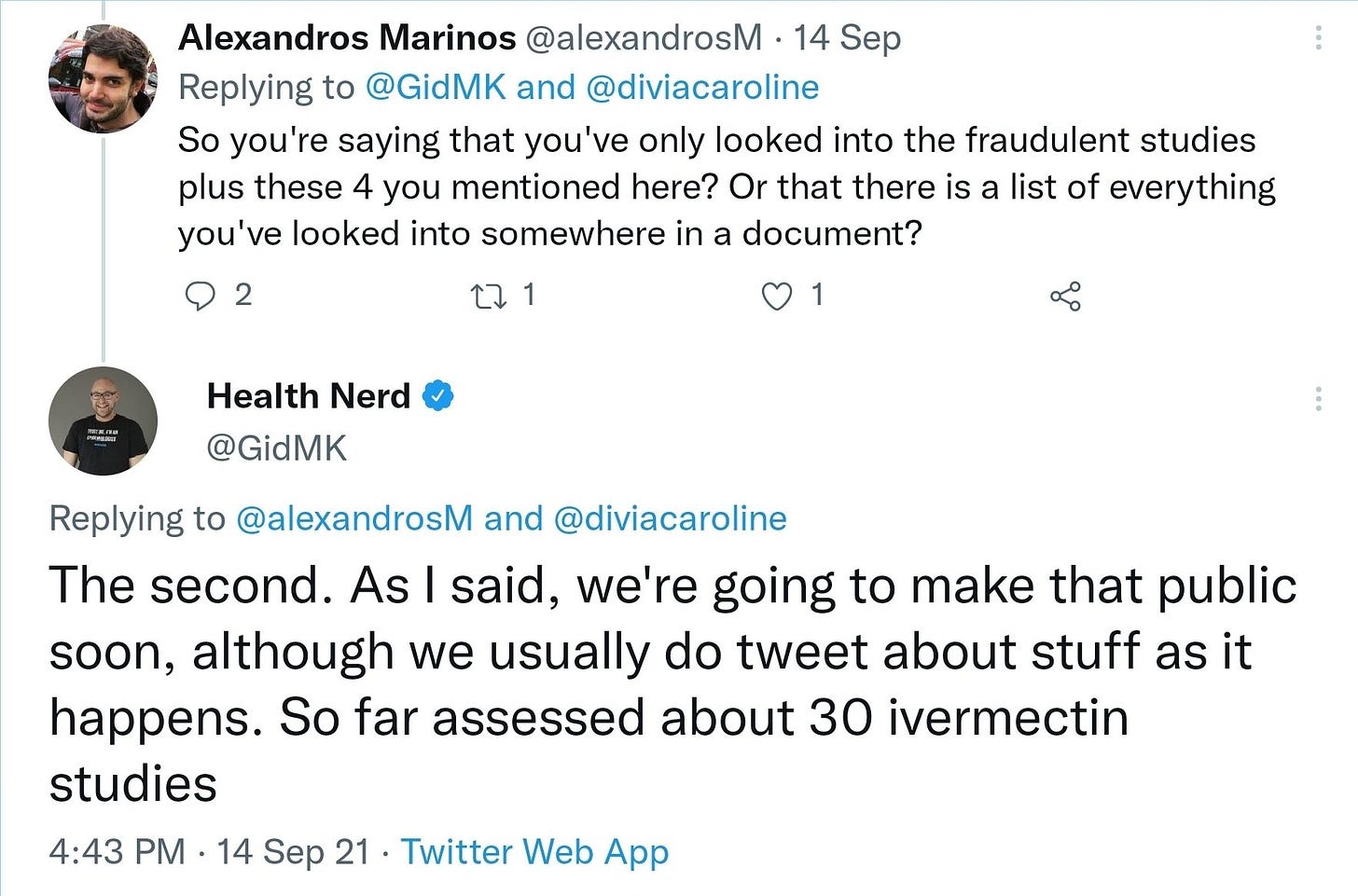

More importantly, he reached his conclusion on ivermectin long before most of the studies we have today were available, and before any of the junk science results he and his colleagues have unearthed and are using today to justify their position. Framing a promising new treatment as something the “COVID cranks” are looking at, and as “something else to debunk”, back in December 2020, before the work had even really started, puts any trust in his judgement far into forbidden territory. I will still look at any evidence he brings up, and as a dedicated adversary he sometimes finds valid points to make, but that’s as much as I can possibly do.

I must also say that, for a fraud researcher and transparency campaigner, GidMK and his team have been remarkably resistant to releasing the full list of 26 (or elsewhere “about 30”) studies they have analyzed, a mix of RCTs and observational studies, from which they draw their statements on the whole body of literature. It’s now been over 10 weeks since that data was promised to be made public “soon”, but despite numerous requests, it has not been forthcoming.

One more thing to mention is that a researcher whom I consider very trustworthy has shown me emails in which he shared data and code with one of GidMK’s colleagues over 3 months ago, without receiving any substantial answer or even a simple public acknowledgement of this transparency. Given this, I can’t know how much data has been given to this team or how they prioritize their work, but it is of utmost importance that they come clean about their interactions with the research community, how much data they have, which papers they have investigated, and so on. When one of the members of the team has tweeted the words below, I don’t think it’s unfair to demand they take reasonable precautions against bias in their own work, the same precautions they demand of others.

I also have to note that GidMK is highly conscious of his feed, and is probably using some automated means of blocking anyone supporting views opposed to his. Not only has he blocked me on Twitter, he seems to have blocked a double-digit percentage of my followers, many of whom were not aware of the block until I asked them to check. My best hypothesis is that anyone “liking” anything I posted opposing his positions would automatically get blocked.

Why bring this up? Scott mentions following GidMK’s feed, and it must be made clear that whatever appears in that feed is likely kept “clean” of opposing opinion by automated blocking. This is a technique I’ve seen before in the anti-Tesla community, used to mislead journalists into thinking the points being made go unopposed, and to prevent any conversation with the “pro” side. I don’t say this lightly, and am open to any alternative explanations for the data, though I can’t possibly imagine what they might be.

Bottom-up vs. Top-down

As I was collecting my thoughts on Scott’s article, he actually responded to some of my early thoughts as posted on Twitter. One of the more interesting points he makes is this:

> I think the main thing I want to cram into his head is how many pseudosciences that have to be false have really strong empirical literatures behind them. There are dozens of positive double-blind RCTs of homeopathy. I feel like I can explain what went wrong with these about a third of the time. The rest of the time, I’m as boggled as everyone else, and I just accept that the biggest studies by the most careful people usually don’t find effects, plus we should have a low prior on an effect since it’s crazy.

I don’t mind if Scott is saying that RCTs can’t be trusted, or that even a solid meta-analysis of RCTs can’t be trusted, because after all, look at homeopathy. I have sympathy for that position and have little or no argument against it, so long as it’s applied evenly to the top-down and the bottom-up. I am unsure whether his skepticism goes so far as to indicate that his mind was made up before he even started his analysis, but I would be interested to understand what threshold the bottom-up evidence would need to clear to convince him.

It’s not like the rest of “official” medicine doesn’t have literally hundreds of reversals happening all the time, which I assume went through “proper channels”.

If we’re not going to adopt complete epistemic nihilism, though, we have to accept *some* things. And while I agree that it is implausible that every one of the couple dozen sister websites of ivmmeta.com points to a genuine cure for COVID, ivermectin stands out for the number of studies (& patients) combined with the strength of the effect it presents, even while its literature has been scrutinized more than any other.

Does this operate as any sort of guarantee that “it works”? Absolutely not. In a remotely sane world, some country’s public health establishment would have sponsored a trial large enough to dwarf anything we have available, and settle the question for good. In fact, in a sane world, we’d have done the same for many of the substances on this list and probably a few more. This is real evidence that the public health establishment is indeed not acting sanely, and it does weigh on my ability to take them at their word on everything else they say on early treatment and beyond.

Vermis Ex Machina

(I sure hope “worms” are indeed “vermis” in Latin or I’ll never hear the end of it)

So here’s where we are in this debate: initially, when the Hill and Lawrie meta-analyses were showing positive results for ivermectin, almost nobody paid attention. Then, GidMK and his colleagues started finding fraudulent studies. Some of them show solid evidence of fraud, others sloppiness. I’ll call them “junk studies” for the moment, because I am uncomfortable with the blanket attribution of intent to the researchers. Crucially, as I’ve pointed out to the interested parties, the number of junk studies found does not exceed the expected baseline for such studies anywhere else in the medical literature.

Despite this, GidMK and his colleagues have consistently made statements that indicate that the ivermectin literature is uniquely fraudulent.

When they are pushed on it, they retreat to the claim that “we have simply found fraudulent papers”, but when speaking freely they make their claim plain: the ivermectin literature is uniquely fraudulent and is not to be trusted as a whole. This is what Scott has popularized as the motte-and-bailey doctrine. It is extremely important that we separate the two claims, because I do not dispute that several studies are junk; my point is only that they don’t exceed what we would expect to see.

So after all this back and forth, with the “anti” side never yielding to the fact that there is, indeed, a real signal of effectiveness and that ivermectin should be administered to COVID patients, it is a bit odd to see a study appear that indicates that, well, yes, there is a signal, but it is because of mediation by roundworms.

Given how careful and strict Scott has been with all the studies he has rejected so far in his analysis, I don’t really understand why he jumps onto this conjecture. There are several hypothesized confounders of this analysis, including timing, dosage, and background use of ivermectin in the control population (a concern for both the Together trial, which did not include ivermectin in its exclusion criteria, and the Lopez-Medina trial, which showed blurred vision in the control group in an area of exceedingly high ivermectin use).

In order to check my thinking, I did some basic investigation into the Mahmud trial, and what I saw isn’t that compelling. In Dhaka, where the trial took place, serological evidence of Strongyloides stercoralis was found in 5% of city and 22% of slum populations, and in general serological evidence may indicate past rather than present infection. Let’s say 10% of study participants (20/200) had a relevant infection. How many of these 20 made it to the ICU, and what is the likelihood that, in any of them, lethal worm hyperinfection was the cause of a death that would otherwise have been avoided? I also note that this would involve local doctors not knowing about this risk and incorrectly administering steroids in an area where roundworm infection is common, a mistake I would not expect them to make.
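The back-of-the-envelope reasoning above is a funnel: every condition that must hold multiplies the expected count by a fraction no greater than 1. Here is a minimal sketch using only the 200 participants and the 5%, 10%, and 22% figures from the paragraph above, and treating population seroprevalence as if it applied to trial participants, which is itself an assumption. The helper `expected_count` is hypothetical, and since serology may reflect past rather than current infection, these counts lean high if anything.

```python
from math import prod

def expected_count(n: int, fractions: list[float]) -> float:
    """Expected number of people left after applying each fraction in turn.

    Every extra condition (reaching the ICU, getting steroids, dying of
    hyperinfection that would otherwise have been avoided, ...) is another
    factor in `fractions`, so the count can only stay the same or shrink.
    """
    for f in fractions:
        if not 0.0 <= f <= 1.0:
            raise ValueError(f"fraction out of range: {f}")
    return n * prod(fractions)

N_PARTICIPANTS = 200  # as used in the text

for prevalence in (0.05, 0.10, 0.22):
    infected = expected_count(N_PARTICIPANTS, [prevalence])
    print(f"prevalence {prevalence:.0%}: ~{infected:.0f} infected")
```

Further stages would be appended to the list with whatever estimates one trusts; I have deliberately not filled in numbers for them.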

Now, I’m not saying that the hypothesis is false. What I *am* saying is that it is too new, and that there are far too many open questions, to be latching onto it as the be-all and end-all explanation. Bitterman’s comment to me, in a Twitter Spaces exchange with a relatively large audience present, that “he just has to explain 20 deaths” also indicated to me that he sees his work as part of the broader fight on ivermectin, not as an independent scientific investigation. All in all, I’ll pass this back to Scott, who wrote the ultimate rebuttal here: “Beware the Man of One Study”. The worms hypothesis should be studied, but we are nowhere near far enough in the process to be elevating it into any sort of definitive explanation for anything.

The same caveats should be applied to Bitterman’s “publication bias” analysis, which explains away the viral load results.

The Bermuda Triangulation

Scott had another extremely valuable quote in his response. Commenting on my point that we’re looking at a top-down vs. bottom-up sensemaking conflict, and that the approach taken necessarily benefits the top-down perspective, he writes:

> I admit this is true and it sucks. I have no solution for it right now. I think of it as like the Large Hadron Collider. If the people who run the LHC ever become biased, we’re doomed, because there’s no way ordinary citizens can pool all of our small hadron colliders and get equally robust results. It’s just an unfair advantage that you get if you can afford a seventeen-mile long tunnel under Switzerland full of giant magnets.

We don’t need to wonder if big pharmaceutical companies and the public health establishment are biased though. We’d have to discard everything we know about behavioral economics, capitalism, organizational design, and a couple dozen other disciplines to avoid knowing that fact. The system must work *in spite* of that. We should not have to trust the integrity of people working there. If this all comes down to trusting those who, in Scott’s words, have “beclowned themselves”, we’re quite simply in deep trouble.

Also, his LHC argument relies on us not knowing about the hundreds of reversals mentioned earlier. Physics, chemistry, mathematics, and other similar fields don’t demonstrate anywhere near the same level of flimsiness to my knowledge. Sadly, in medicine, when it comes to mandating stricter and stricter rules of evidence, there seems to be no such thing as a free lunch. As the cost of a study goes higher, so does the motivation and sophistication of the players willing to find ever more subtle ways to game the system.

I also question whether this is the sort of problem that only a massive collider (RCTs) can solve, with no other way around it. RCTs are a tool like any other, with deep flaws. As Goodhart’s law teaches us, any metric that becomes a target will cease to be a good metric. When billions of dollars are at stake, evidence produced by a pre-announced formula that an intelligent opponent is working to game must be strongly suspected of being compromised. If we believe a superintelligent AI could find a way to reliably subvert RCTs to get its desired outcomes in a short period of time, then the only question is whether a big pharmaceutical company has enough brain power to accomplish something similar after decades of trying. That would certainly explain the reversals.

Not all is lost, though. Biology solved this problem long before we knew it existed, and we ourselves have solved it over and over again in areas other than medicine. The solution to sensory monoculture is its exact opposite: cultivating sensory pluralism.

It comes with many names. Robotics calls it “sensor fusion”, hedge funds call it using “alternative data”, evolutionary psychology calls it “nomological networks of cumulative evidence”, social sciences call it “methodological triangulation”, astronomers call it “multi-messenger astronomy”, in biology it’s called “multisensory integration”, and in vision it’s called “parallax”.

The idea itself is very simple, though it took me a decade to absorb. If you’re worried about implementation issues in your sensors, use many different sensors. If you’re seeing something, your eyes may be playing tricks on you. But if you’re seeing, smelling, touching, and tasting something, well, at that point it’s not an artifact of your senses. It would be extraordinarily unlikely (read: impossible) for all your senses to misfire in the same way at the same time without some unifying cause, either in your brain, or in the world.

Smart people like Daniel Schmachtenberger and Tom Beakbane call it Consilience.

What does this have to do with ivermectin? Well, here’s how I process the state of the evidence. There are several different lines of evidence pointing us in the direction that ivermectin helps significantly with COVID. Any one of them being true would be sufficient, so several of them could be wrong and the conclusion would still hold. This is how I like my theses: supported by multiple independent lines of evidence.

The way I understand the counter-argument is this: focus on the most “prestigious” signal, and work hard to chip away at it. First, remove several studies because of form or style issues. Then, trust GidMK and remove even more studies. Then, indicate that there are *unusual* levels of fraud in the findings that *are* significant (a mistake Scott hasn’t made, but others constantly do). Then, explain away what’s left with a brand new hypothesis about worms. Then, explain away viral replication results with an ad-hoc analysis indicating publication bias. Then, explain away what’s left after *that* as *not statistically significant*. Finally, somehow use the tower of arguments you’ve constructed to indicate that all other lines of evidence are similarly suspect and should be discarded.

Notice something, though: this settles into a *tower* of arguments. Many of them were initially put forward as the complete answer, but by now they are all coming together to form a single, composite explanation. All of them, or almost all of them, have to work to explain away the signal. It’s not enough for a couple of them to be right, because there’s just too much signal to explain away. And while I find some of those arguments plausible, others have very compelling counter-arguments, ones that don’t seem to be getting evaluated properly.

In short, I like support for my positions to be linked mostly with OR operators, and am suspicious of arguments held together by AND operators. Consider this a rephrasing of Occam’s Razor. This is probably where I should detail the various arguments in favor of ivermectin, but for one, this article has gone on long enough, and for another, I’m probably not the best person to write it.
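A minimal sketch of the OR-versus-AND intuition, assuming each argument or line of evidence holds independently of the others with some probability. The probabilities below are purely hypothetical placeholders, not estimates of any real argument. Independence is doing a lot of work here: if the lines of evidence share a common bias, the OR case gains far less than this suggests.

```python
from math import prod

def p_all_hold(ps: list[float]) -> float:
    """AND: probability that every argument holds (independence assumed)."""
    return prod(ps)

def p_any_holds(ps: list[float]) -> float:
    """OR: probability that at least one argument holds (independence assumed)."""
    return 1.0 - prod(1.0 - p for p in ps)

# Hypothetical: six arguments, each judged 70% likely to be right on its own.
ps = [0.7] * 6
print(f"AND (all six must hold): {p_all_hold(ps):.3f}")  # 0.118
print(f"OR (any one suffices):   {p_any_holds(ps):.3f}")  # 0.999
```

The same arithmetic cuts both ways: a chain of reasoning held together by AND is only as strong as the product of its links.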

Does this mean it is impossible that I have the wrong idea? Absolutely not. I can see how the pandemic, and the dozens of flame wars it has caused, may have hardened my view. This is why I chose to let Scott’s article sit with me for a while, why I pre-declared the analysis I was going to do, and why I made sure to do as much work as possible to articulate my view, so that flaws with it can be identified. If significant issues with the above are found, I commit to correcting and updating this analysis, even if the conclusion is reversed.

Do Your Own Research

Scott introduced his response to me thus:

> Alexandros Marinos is the most thoughtful and dedicated ivermectin proponent I know of

I take it as a well-intended comment, and I understand that the net effect of my work is a defense of ivermectin. But I don’t see myself as an ivermectin proponent, mostly because I have no real way to know if it “works”. I’m not a biologist, medical doctor, or statistician. What I am a proponent of is epistemic honesty and balanced evaluation of evidence on all sides, and what I do know is that the structure of the arguments made against ivermectin looks highly suspect. I’ve gone in depth on most if not all of them, and they are a cornucopia of bad facts and bad logic. Scott’s article is the best of the genre, as it is not obviously riddled with either, though at the cost of a more complex explanation. It must be said that Scott’s article also stands out for its good faith and civility of engagement, which makes it easier to respond to. It’s far harder to parse bad faith arguments and indirection, and for this I am grateful to him.

Perhaps life really is this complex, and this is the complexity required to explain what we’re looking at in this case. But even if ivermectin isn’t actually effective, I’d expect the explanation for the signal we see to be far simpler. If we have to apply a level of explanatory pressure that would collapse any other evidence base, and the evidence base is still holding on, then we might be looking at an isolated demand for rigor.

Overall though, Scott and I agree on the most important point. Experts cannot be blindly trusted. That just can’t be the way forward. And while I’m sure that my proposed solutions to the sensemaking catastrophe we have been witnessing over the last few years are not without downsides, I really don’t see another way out than more activation of the bottom-up dynamic.

This is why this blog is called Do Your Own Research. People should trust others to an extent, but they should also frequently verify to the extent possible, and that applies to everyone, including me. We can’t hope to have a sane information ecology without widespread verification and discussion, or even generation of novel results. I hope not to have to write pieces of this length very often, but when I do, I expect all of you to be there to tell me all the ways I’m wrong.

And with this, I expect no less than to be slaughtered in the comments. Have at it! You will only make me stronger.
